SageMaker PyTorch

With SageMaker multi-model endpoints (MMEs), you can host multiple models on a single serving container and serve all of them behind a single endpoint.
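On a multi-model endpoint (MME), each request names the model it wants through the `TargetModel` parameter of the SageMaker Runtime `InvokeEndpoint` API, and SageMaker loads that model into the shared container on demand. The following is a minimal sketch using boto3. The endpoint name `my-mme-endpoint`, the artifact name `model-a.tar.gz`, and the JSON payload shape are hypothetical. The request arguments are built separately so the routing can be checked without calling AWS.

```python
import json
from typing import Any, Dict


def build_invoke_kwargs(endpoint_name: str, target_model: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Build keyword arguments for the sagemaker-runtime invoke_endpoint call on an MME.

    target_model is the artifact path relative to the endpoint's S3 model prefix
    (for example the hypothetical "model-a.tar.gz").
    """
    return {
        "EndpointName": endpoint_name,
        "TargetModel": target_model,  # tells the MME which model should handle this request
        "ContentType": "application/json",
        "Body": json.dumps(payload).encode("utf-8"),
    }


def invoke(endpoint_name: str, target_model: str, payload: Dict[str, Any]) -> bytes:
    """Send the request with boto3 (requires AWS credentials and a deployed MME)."""
    import boto3  # imported here so build_invoke_kwargs works without boto3 installed

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(**build_invoke_kwargs(endpoint_name, target_model, payload))
    return response["Body"].read()
```

Switching models is then just a matter of changing the second argument, for example `invoke("my-mme-endpoint", "model-b.tar.gz", {...})`. The payload format itself depends on each model's inference handler.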

Hugging Face Transformers also provides the Trainer and pretrained model classes for PyTorch to help reduce the effort of configuring natural language processing (NLP) models. If input sequences are not padded to a fixed length, the dynamic input shape can trigger recompilation of the model and might increase total training time. For more information about the padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class. You don't need to change your code when you use the transformers.Trainer class.
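Padding every batch to the same fixed length keeps the input shape static, so the compiler does not see a new shape on each step. With the Transformers tokenizers this is typically requested with `padding="max_length"` and `truncation=True`. The following dependency-free sketch mimics that behavior on lists of token IDs so the idea is easy to check. The helper name, the pad ID of 0, the length of 8, and the token IDs themselves are arbitrary choices for illustration.

```python
from typing import List, Tuple


def pad_to_fixed_length(
    batch: List[List[int]], max_length: int, pad_id: int = 0
) -> Tuple[List[List[int]], List[List[int]]]:
    """Truncate or right-pad each sequence to max_length.

    Returns (input_ids, attention_mask); the mask is 1 for real tokens and 0 for padding.
    """
    input_ids, attention_mask = [], []
    for seq in batch:
        seq = seq[:max_length]                    # truncation
        n_pad = max_length - len(seq)
        input_ids.append(seq + [pad_id] * n_pad)  # right padding
        attention_mask.append([1] * len(seq) + [0] * n_pad)
    return input_ids, attention_mask


# One short and one too-long sequence (arbitrary IDs); both come out with length 8,
# so every batch has the same (batch_size, 8) shape.
ids, mask = pad_to_fixed_length(
    [[101, 7592, 102], [101, 2023, 2003, 1037, 2936, 6251, 2007, 2062, 102]],
    max_length=8,
)
```

The trade-off is some wasted computation on padding tokens in exchange for avoiding repeated recompilation.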

He is passionate about model optimization and model serving, with experience ranging from RTL verification and embedded software to computer vision and PyTorch.

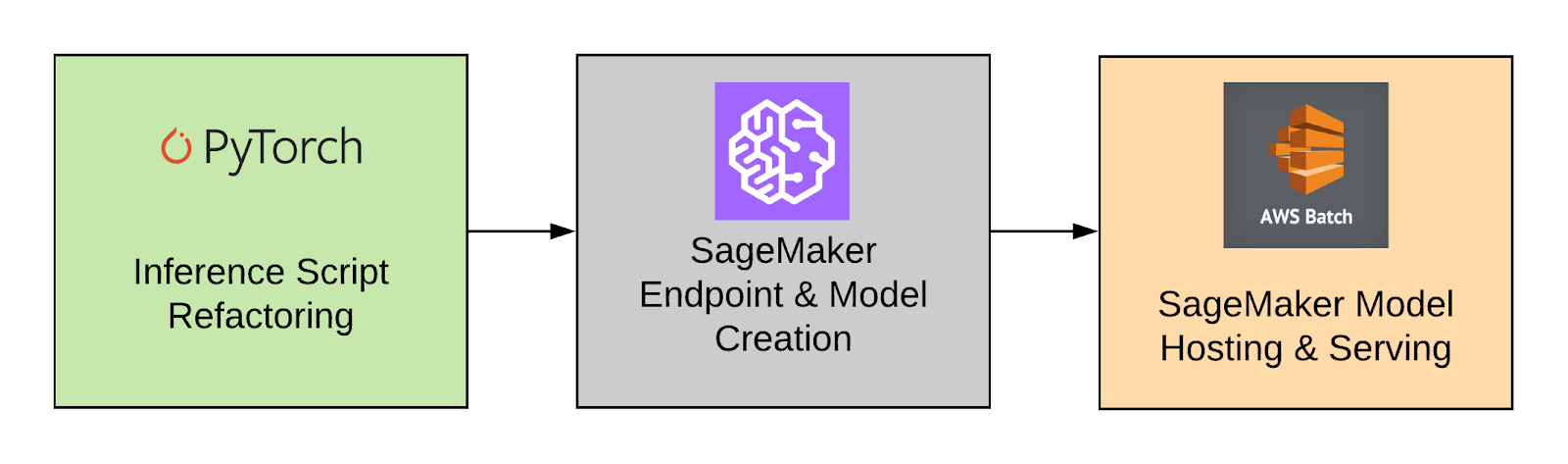

Deploying high-quality, trained machine learning (ML) models to perform either batch or real-time inference is a critical piece of bringing value to customers. However, the ML experimentation process can be tedious: many approaches take a significant amount of time to implement. Amazon SageMaker provides a unified interface to experiment with different ML models, and the PyTorch Model Zoo allows us to easily swap our models in a standardized manner. Setting up these ML models as a SageMaker endpoint or SageMaker Batch Transform job for online or offline inference is easy with the steps outlined in this blog post. We will use a Faster R-CNN object detection model to predict bounding boxes for predefined object classes. Finally, we will deploy the ML model, perform inference on it using SageMaker Batch Transform, inspect the ML model output, and learn how to interpret the results. This solution can be applied to any other pretrained model in the PyTorch Model Zoo. For a list of available models, see the PyTorch Model Zoo documentation.
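torchvision's Faster R-CNN models return, for each image, a dictionary with `boxes` (in `[x1, y1, x2, y2]` pixel coordinates), `labels`, and `scores`. Interpreting the output mostly means keeping confident detections and mapping label IDs to class names. The following sketch assumes the inference handler serialized that dictionary to JSON lists; the actual format depends on your handler. The 0.5 threshold, the partial label map, and the example prediction are illustrative, not real model output.

```python
from typing import Dict, List

# Partial COCO label map; torchvision's COCO-trained detectors use 1 = person, 2 = bicycle, 3 = car.
LABELS = {1: "person", 2: "bicycle", 3: "car"}


def filter_detections(prediction: Dict[str, list], score_threshold: float = 0.5) -> List[Dict[str, object]]:
    """Keep detections whose score meets the threshold, highest score first."""
    detections = []
    for box, label, score in zip(prediction["boxes"], prediction["labels"], prediction["scores"]):
        if score >= score_threshold:
            detections.append({
                "class": LABELS.get(label, f"class_{label}"),  # fall back to the raw ID
                "score": round(score, 3),
                "box": [round(v, 1) for v in box],  # [x1, y1, x2, y2] in pixels
            })
    return sorted(detections, key=lambda d: d["score"], reverse=True)


# Hypothetical output for one image, already converted from tensors to lists.
example = {
    "boxes": [[10.0, 20.0, 110.0, 220.0], [50.0, 60.0, 90.0, 100.0], [5.0, 5.0, 15.0, 15.0]],
    "labels": [1, 3, 2],
    "scores": [0.98, 0.72, 0.31],
}
print(filter_detections(example))
```

Raising or lowering `score_threshold` trades missed objects against false positives, so it is worth tuning on a few sample images from your own data.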

Starting today, you can easily train and deploy your PyTorch deep learning models in Amazon SageMaker. Just like with the other frameworks that Amazon SageMaker supports, you can now write your PyTorch script as you normally would and rely on Amazon SageMaker training to handle setting up the distributed training cluster, transferring data, and even hyperparameter tuning. On the inference side, Amazon SageMaker provides a managed, highly available online endpoint that can be automatically scaled up as needed. Supporting many deep learning frameworks is important to developers, since each framework has unique areas of strength. PyTorch is used heavily by deep learning researchers, and it is also rapidly gaining popularity among developers due to its flexibility and ease of use. TensorFlow is well established and continues to add great features with each release. The PyTorch framework is unique.
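In script mode, SageMaker passes the estimator's hyperparameters to your script as command-line arguments and exposes paths such as the model output directory and the training data channel through environment variables (`SM_MODEL_DIR`, `SM_CHANNEL_<NAME>`, `SM_HOSTS`). The following is a minimal sketch of the argument parsing at the top of such a script. The hyperparameter names `--epochs` and `--lr` are hypothetical, and `SM_CHANNEL_TRAINING` assumes an input channel named `training`.

```python
import argparse
import json
import os
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse hyperparameters (CLI flags) and SageMaker-provided paths (env vars)."""
    parser = argparse.ArgumentParser()
    # Hyperparameters set on the estimator arrive as "--name value" arguments.
    parser.add_argument("--epochs", type=int, default=10)
    parser.add_argument("--lr", type=float, default=0.01)
    # SageMaker sets these variables inside the training container; the fallbacks
    # let the same script run on a local machine.
    parser.add_argument("--model-dir", default=os.environ.get("SM_MODEL_DIR", "./model"))
    parser.add_argument("--train", default=os.environ.get("SM_CHANNEL_TRAINING", "./data"))
    # SM_HOSTS is a JSON list of host names; argparse also applies type= to string defaults.
    parser.add_argument("--hosts", type=json.loads, default=os.environ.get("SM_HOSTS", '["localhost"]'))
    return parser.parse_args(argv)
```

Calling `parse_args()` with no arguments reads `sys.argv`, which is what SageMaker populates. The rest of the script can stay ordinary PyTorch code that saves the trained model under `args.model_dir`.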

For a complete list of parameters, refer to the GitHub repo. You can use the TrainingArguments class to achieve this. With all of these changes, you should be able to launch distributed training with any PyTorch model without the Transformers Trainer API. This function accepts the index argument to indicate the rank of the current GPU in the cluster for distributed training. After you are done, follow the instructions in the cleanup section of the notebook to delete the resources provisioned in this post and avoid unnecessary charges.
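How the rank index is used depends on your training loop. One common pattern is to give each worker a disjoint slice of the data based on its rank. The following is a minimal, framework-free sketch of that idea; `shard_for_rank` is a hypothetical helper. In PyTorch you would more typically use `torch.utils.data.distributed.DistributedSampler`, which applies a similar strided split.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard_for_rank(dataset: Sequence[T], rank: int, world_size: int) -> List[T]:
    """Return the examples this worker should process.

    Worker `rank` takes every `world_size`-th example starting at its own index,
    so the shards are disjoint and together cover the whole dataset.
    """
    if not 0 <= rank < world_size:
        raise ValueError(f"rank {rank} is outside [0, {world_size})")
    return list(dataset[rank::world_size])


# With 2 workers, rank 0 gets the even positions and rank 1 the odd ones.
shards = [shard_for_rank(list(range(10)), r, world_size=2) for r in range(2)]
```

Unlike `DistributedSampler`, this sketch does not shuffle or pad shards to equal length, so workers can receive slightly different numbers of examples.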

A generative adversarial network (GAN) is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas and synthetic data, and more.

Recently, generative AI applications have captured widespread attention and imagination. The following code is an example of the skeleton folder for the SD model:

The following table summarizes the differences between single-model and multi-model endpoints for this example. A detailed illustration of this user flow follows. After confirming the correct object selection, you can send the original and mask images to a second model for removal. You can observe the benefit of improved instance saturation, which ultimately leads to cost savings. The example we shared illustrates how we can use resource sharing and simplified model management with SageMaker MMEs while still using TorchServe as our model serving stack.

Import the optimization libraries. If you're not using AMP, replace optimizer. Replace torch.

0 thoughts on “Sagemaker pytorch”