nn.CrossEntropyLoss

It is useful when training a classification problem with C classes.

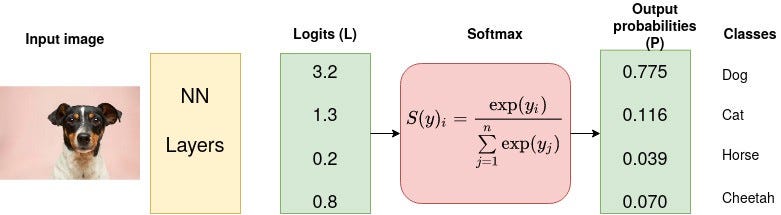

In machine learning classification problems, cross-entropy loss is a frequently used loss function. It measures the difference between the predicted probability distribution and the true probability distribution of the target classes. The loss penalizes the model more heavily when it is confident in the wrong class, which makes intuitive sense. For instance, if the model predicts a low probability for the correct class but a high probability for an incorrect class, the cross-entropy loss will be large. In the simple form

$$\text{loss}(x, y) = -\sum_{c=1}^{C} y_c \log x_c$$

x is the predicted probability distribution, y is the true probability distribution represented as a one-hot encoded vector, log is the natural logarithm, and the sum is taken over all C classes.
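The formula above can be checked with a few lines of plain Python. This is a minimal from-scratch sketch, not PyTorch's implementation. It assumes x is already a probability distribution and y is one-hot. The example values are made up to contrast a confident correct prediction with a confident wrong one.

```python
import math

def cross_entropy(x, y):
    """Cross-entropy between a predicted distribution x and a true distribution y.

    Both are lists of probabilities over the same C classes; y is usually one-hot.
    """
    # Only classes with y_c > 0 contribute, which also avoids evaluating log(0) there.
    return -sum(y_c * math.log(x_c) for x_c, y_c in zip(x, y) if y_c > 0)

y = [0.0, 1.0, 0.0]                   # true class is index 1 (one-hot)
confident_right = [0.05, 0.90, 0.05]  # high probability on the correct class
confident_wrong = [0.90, 0.05, 0.05]  # high probability on an incorrect class

print(round(cross_entropy(confident_right, y), 4))  # 0.1054, i.e. -log(0.90)
print(round(cross_entropy(confident_wrong, y), 4))  # 2.9957, i.e. -log(0.05)
```

With a one-hot y, the sum reduces to the negative log-probability assigned to the true class. That is why a confidently wrong prediction is penalized so much more heavily.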


I am trying to compute the cross-entropy loss of a given output of my network. Can anyone help me? I am really confused and have tried almost everything I could imagine. This is the code that I use to get the output of the last timestep. I don't know if there is a simpler solution; if there is, I'd like to know it. This is my forward.

Yes, by default the targets at zero-padded timesteps contribute to the loss. However, it is very easy to mask them. You have two options, depending on the version of PyTorch that you use. PyTorch 0. For example, in language modeling or seq2seq, where I add zero padding, I mask the zero-padded word targets simply like this: You may also be interested in this discussion.
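The masking code from the answer is not reproduced here. The following plain-Python sketch shows the same idea under one assumption: padded timesteps carry a hypothetical padding index `PAD_IDX = 0` in the target sequence. Those timesteps are skipped, and the loss is averaged over the real tokens only. For reference, `nn.CrossEntropyLoss` in PyTorch also accepts an `ignore_index` argument that serves this purpose. The helper names below are illustrative, not PyTorch functions.

```python
import math

PAD_IDX = 0  # hypothetical padding index used in the target sequence

def log_softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]

def masked_sequence_loss(step_logits, targets, pad_idx=PAD_IDX):
    """Mean cross-entropy over timesteps whose target is not the padding index."""
    total, count = 0.0, 0
    for logits, t in zip(step_logits, targets):
        if t == pad_idx:
            continue  # padded timestep: contributes nothing to the loss
        total -= log_softmax(logits)[t]
        count += 1
    return total / count if count else 0.0

# Three timesteps over a 4-word vocabulary; the last target is padding.
step_logits = [[0.1, 2.0, 0.3, 0.2],
               [0.5, 0.1, 1.5, 0.0],
               [3.0, 0.0, 0.0, 0.0]]
targets = [1, 2, PAD_IDX]
print(masked_sequence_loss(step_logits, targets))
```

Dividing by the number of unmasked tokens, rather than the full sequence length, keeps padded batches from diluting the loss.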

This class combines the nn.LogSoftmax and nn.NLLLoss functions to compute the loss in a numerically stable way. In the question above, the network output is a torch.FloatTensor of size 1x10, and the desired label is a Variable as well.
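To illustrate why combining log-softmax with the negative log-likelihood is numerically safer, here is a pure-Python sketch with one row of 10 class scores, matching the 1x10 output shape. It uses the log-sum-exp trick as a stand-in for what the fused criterion does internally. The function names are illustrative and are not PyTorch APIs.

```python
import math

def log_softmax(logits):
    """Log-softmax computed with the log-sum-exp trick."""
    m = max(logits)  # shifting by the max keeps every exp() argument <= 0
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]

def nll(log_probs, target):
    """Negative log-likelihood of the target class index."""
    return -log_probs[target]

def naive_cross_entropy(logits, target):
    # Direct softmax first; math.exp overflows for large logits.
    exps = [math.exp(v) for v in logits]
    return -math.log(exps[target] / sum(exps))

logits = [0.0] * 9 + [1000.0]  # one row of 10 class scores, one of them huge
target = 0                     # the true class is not the one with the huge score

print(nll(log_softmax(logits), target))  # 1000.0
try:
    naive_cross_entropy(logits, target)
except OverflowError:
    print("naive softmax overflowed")
```

Both routes agree for moderate scores. Only the stable version survives extreme ones.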


Deep learning consists of composing linearities with non-linearities in clever ways. The introduction of non-linearities allows for powerful models. In this section, we will play with these core components, make up an objective function, and see how the model is trained. PyTorch and most other deep learning frameworks do things a little differently than traditional linear algebra: an affine map such as nn.Linear maps the rows of the input instead of the columns.
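The row-wise convention can be sketched in plain Python. Each row of the input batch is one sample, and each row is mapped independently as y = x W^T + b. This mirrors the y = xA^T + b form used by nn.Linear. The matrix sizes and values below are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def linear(X, W, b):
    """Apply y = x W^T + b to every row x of X.

    X: batch of inputs, shape (N, in_features), given as a list of rows
    W: weights, shape (out_features, in_features)
    b: bias, length out_features
    """
    return [
        [sum(x_i * w_i for x_i, w_i in zip(x, w_row)) + b_j
         for w_row, b_j in zip(W, b)]
        for x in X
    ]

# Hypothetical sizes: 2 samples (rows), 3 input features, 2 output features.
X = [[1.0, 2.0, 3.0],
     [0.0, 1.0, 0.0]]
W = [[1.0, 0.0, 1.0],
     [0.5, 0.5, 0.5]]
b = [0.0, 1.0]
print(linear(X, W, b))  # [[4.0, 4.0], [0.0, 1.5]]: one output row per input row
```

Keeping samples as rows lets a whole minibatch pass through the same map at once.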


The cross-entropy loss function is an important criterion for evaluating multi-class classification models. This tutorial demystifies the cross-entropy loss function by providing an overview of its significance and implementation in deep learning. Loss functions are essential for guiding model training and improving the predictive accuracy of models. The cross-entropy loss function is a fundamental concept in classification tasks, especially in multi-class classification. It quantifies the difference between predicted probabilities and the actual class labels. Entropy comes from information theory and measures the amount of uncertainty or randomness in a given probability distribution. You can think of it as measuring how uncertain we are about the outcome of a random variable: high entropy indicates more randomness, while low entropy indicates more predictability.
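The link between entropy and uncertainty is easy to see numerically. This sketch computes Shannon entropy in nats, using the natural log, for three made-up distributions over four outcomes: uniform, sharply peaked, and fully certain.

```python
import math

def entropy(p):
    """Shannon entropy (in nats) of a discrete probability distribution p."""
    # Terms with p_i == 0 contribute 0 by convention (p log p -> 0 as p -> 0).
    return sum(-p_i * math.log(p_i) for p_i in p if p_i > 0)

uniform = [0.25, 0.25, 0.25, 0.25]  # maximally uncertain over 4 outcomes
peaked = [0.97, 0.01, 0.01, 0.01]   # nearly predictable
certain = [1.0, 0.0, 0.0, 0.0]      # no uncertainty at all

print(entropy(uniform))  # equals math.log(4), the maximum for 4 outcomes
print(entropy(peaked))   # much smaller than the uniform case
print(entropy(certain))  # 0.0
```

Cross-entropy uses the same -p log q form, but it scores the true distribution p against the model's predicted distribution q.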


Note that for some losses, there are multiple elements per sample. Besides class indices, the target can also contain probabilities for each class; this is useful when labels beyond a single class per minibatch item are required, such as for blended labels, label smoothing, etc. To see what the loss does with such targets, you can also compute the softmax probabilities manually.
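Below is a minimal pure-Python sketch of cross-entropy with probability targets, with the softmax computed manually. It builds a smoothed label by blending a one-hot vector with a uniform distribution, using (1 - eps) * one_hot + eps / C. This blending rule is an assumption of the sketch, chosen as one common form of label smoothing. The helper names and logits are hypothetical.

```python
import math

def softmax(logits):
    # Shift by the max for numerical stability, then normalize.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_target_cross_entropy(logits, target_probs):
    """Cross-entropy when the target gives a probability for each class."""
    probs = softmax(logits)
    return sum(-t * math.log(p) for t, p in zip(target_probs, probs))

def smoothed_one_hot(class_idx, num_classes, eps):
    """Blend a one-hot label with a uniform distribution (label smoothing)."""
    return [(1.0 - eps) * (1.0 if c == class_idx else 0.0) + eps / num_classes
            for c in range(num_classes)]

logits = [2.0, 0.5, -1.0]
hard = smoothed_one_hot(0, 3, eps=0.0)  # plain one-hot target [1.0, 0.0, 0.0]
soft = smoothed_one_hot(0, 3, eps=0.1)  # about [0.933, 0.033, 0.033]
print(softmax(logits))
print(soft_target_cross_entropy(logits, hard))
print(soft_target_cross_entropy(logits, soft))
```

With eps = 0 this reduces to the ordinary class-index loss. A positive eps places some target mass on the other classes, so the loss no longer rewards pushing the correct class probability all the way to 1.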


For a vector x of raw, unnormalized scores (logits) and a target class index, the PyTorch cross-entropy loss can be defined as:

$$\text{loss}(x, \text{class}) = -\log\left(\frac{\exp(x[\text{class}])}{\sum_j \exp(x[j])}\right) = -x[\text{class}] + \log\left(\sum_j \exp(x[j])\right)$$

The optional weight argument is a manual rescaling weight given to each class. If given, it has to be a Tensor of size C and floating point dtype. The reduction argument (default: 'mean') controls how the per-sample losses are combined. If reduction is not 'none', they are either averaged ('mean'; a weighted average when weight is given) or summed ('sum'). The performance of this criterion is generally better when target contains class indices, as this allows for optimized computation.

To summarize, cross-entropy loss is a popular loss function in deep learning and is very effective for classification tasks.
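The class-index formula, per-class weights and the three reduction modes can be combined in a short pure-Python sketch. As understood here, a weighted 'mean' divides by the total weight of the targets rather than by the batch size, and the sketch follows that behaviour. The function names, weights and logits are hypothetical.

```python
import math

def per_sample_losses(batch_logits, targets, weight=None):
    """Per-sample loss w[y] * (-x[y] + log(sum_j exp(x[j]))), i.e. reduction='none'."""
    losses = []
    for x, y in zip(batch_logits, targets):
        m = max(x)  # log-sum-exp trick for numerical stability
        lse = m + math.log(sum(math.exp(v - m) for v in x))
        w = 1.0 if weight is None else weight[y]
        losses.append(w * (lse - x[y]))
    return losses

def reduce_losses(losses, targets, weight=None, reduction="mean"):
    if reduction == "none":
        return losses
    if reduction == "sum":
        return sum(losses)
    if reduction == "mean":
        # Weighted mean: divide by the total weight of the targets, not by N.
        denom = len(targets) if weight is None else sum(weight[y] for y in targets)
        return sum(losses) / denom
    raise ValueError(f"unknown reduction: {reduction}")

batch_logits = [[2.0, 0.0, -1.0], [0.1, 0.2, 3.0]]
targets = [0, 2]
weight = [1.0, 1.0, 4.0]  # hypothetical: class 2 counts four times as much
losses = per_sample_losses(batch_logits, targets, weight)
print(reduce_losses(losses, targets, weight, "mean"))
print(reduce_losses(losses, targets, weight, "sum"))
```

Normalizing by the summed weights keeps the 'mean' loss on a comparable scale when class weights change. Dividing by N would inflate it whenever heavily weighted classes appear in the batch.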
