Emr amazon

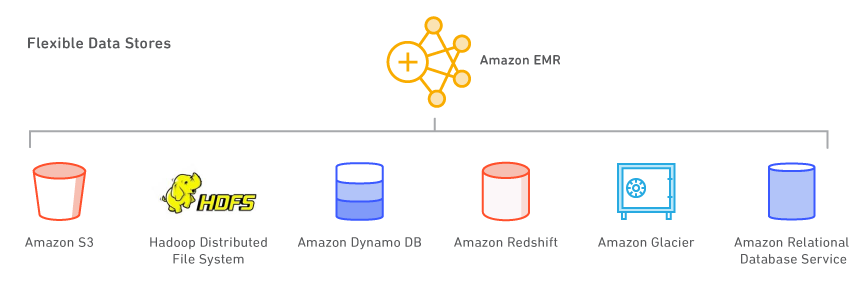

Run big data applications and petabyte-scale data analytics faster, and at less than half the cost of on-premises solutions. Amazon EMR is the industry-leading cloud big data solution for petabyte-scale data processing, interactive analytics, and machine learning using open-source frameworks such as Apache Spark, Apache Hive, and Presto. Run large-scale data processing and what-if analysis using statistical algorithms and predictive models to uncover hidden patterns, correlations, market trends, and customer preferences. Extract data from a variety of sources, process it at scale, and make it available for applications and users.

This topic provides an overview of Amazon EMR clusters, including how to submit work to a cluster, how that data is processed, and the various states that the cluster goes through during processing. The central component of Amazon EMR is the cluster. Each instance in the cluster is called a node. Each node has a role within the cluster, referred to as the node type. Amazon EMR also installs different software components on each node type, giving each node a role in a distributed application like Apache Hadoop.

Primary node: A node that manages the cluster by running software components to coordinate the distribution of data and tasks among other nodes for processing.
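The node types described above can be sketched as the instance groups of a small cluster. This is only an illustration: the helper name `build_instance_groups` and the instance type `m5.xlarge` are assumptions, and the role strings follow the EMR API, where the primary node is labeled `MASTER` and the worker roles are `CORE` and `TASK`.

```python
# Sketch: describe the nodes of a small cluster as instance groups.
# build_instance_groups is a hypothetical helper, not part of any AWS SDK.

def build_instance_groups(instance_type, core_count, task_count=0):
    """Return instance-group definitions for one primary node plus workers."""
    groups = [
        # Exactly one primary node coordinates the cluster.
        {"Name": "Primary", "InstanceRole": "MASTER",
         "InstanceType": instance_type, "InstanceCount": 1},
        # Core nodes run tasks and store data.
        {"Name": "Core", "InstanceRole": "CORE",
         "InstanceType": instance_type, "InstanceCount": core_count},
    ]
    if task_count > 0:
        # Task nodes are optional and only add compute capacity.
        groups.append({"Name": "Task", "InstanceRole": "TASK",
                       "InstanceType": instance_type,
                       "InstanceCount": task_count})
    return groups


groups = build_instance_groups("m5.xlarge", core_count=2)
for group in groups:
    print(group["InstanceRole"], group["InstanceCount"])
```

A list shaped like this is what the `Instances` section of a cluster-creation request would typically carry under `InstanceGroups`.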

With Amazon EMR, organizations can process and analyze massive amounts of data. Unlike AWS Glue or a third-party big data cloud service, EMR is a dedicated service, and it is fairly expensive because of the overhead of big data processing systems. Even if you aren't executing a job against the cluster, you are paying for that compute time and its supporting ensemble of services. Forgetting an EMR cluster overnight can run into hundreds of dollars in spend, which is certainly an issue for students and moonlighters. So remember to double-check the status of any cluster you turned on, and be prepared for larger costs than with EC2, S3, or RDS.

The MapReduce half of the name Elastic MapReduce refers to a programming paradigm that is the central pattern behind the open-source big data software Apache Hadoop, which gave way to the Hadoop ecosystem: an ensemble of supporting applications like YARN and ZooKeeper and standalone applications like Spark.

Ironically, Apache Hadoop had a meteoric rise after the financial crisis, as a way for corporations to 'cheaply' store and analyze data in lieu of legacy OLAP (Online Analytical Processing) data warehouses, which were very costly in licensing, hardware, and operation. Furthermore, public cloud was still very taboo at the time for most larger technology organizations. Hadoop gave those teams and executives the best of all worlds: innovative technology, an embrace of the open-source movement, and the security and control of on-premises systems. The honeymoon with Hadoop ended early.
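To make the MapReduce paradigm mentioned above concrete, here is a minimal single-process word count in plain Python. It only illustrates the map, shuffle, and reduce phases; it is not how Hadoop or EMR implement them, and all function names are made up for this sketch.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse the values of one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence over a list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = word_count(["big data on EMR", "big clusters process big data"])
print(counts)
```

In a real cluster the map and reduce calls run on many nodes in parallel and the shuffle moves data across the network; the structure of the computation stays the same.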

Amazon EMR simplifies building and operating big data environments and applications. Related EMR features include easy provisioning, managed scaling, and reconfiguring of clusters, and EMR Studio for collaborative development.

Provision clusters in minutes: You can launch an EMR cluster in minutes. EMR takes care of provisioning and setting up the cluster, allowing you to focus your teams on developing differentiated big data applications.

Easily scale resources to meet business needs: You can set scale-out and scale-in limits using EMR Managed Scaling policies and let your EMR cluster automatically manage the compute resources to meet your usage and performance needs.
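As a rough sketch of what a managed scaling policy looks like, the snippet below builds the policy document as a plain dictionary. The helper name `build_managed_scaling_policy` and the limits used are assumptions for illustration; the field names follow the `ComputeLimits` structure of the EMR API as best understood here, so check them against the current API reference before relying on them.

```python
# Sketch: build a managed scaling policy as a plain dictionary.
# build_managed_scaling_policy is a hypothetical helper for illustration.

ALLOWED_UNIT_TYPES = {"Instances", "InstanceFleetUnits", "VCPU"}


def build_managed_scaling_policy(min_units, max_units, unit_type="Instances"):
    """Return a policy that lets the cluster size float between two limits."""
    if unit_type not in ALLOWED_UNIT_TYPES:
        raise ValueError(f"unsupported unit type: {unit_type}")
    if not 1 <= min_units <= max_units:
        raise ValueError("expected 1 <= min_units <= max_units")
    return {
        "ComputeLimits": {
            "UnitType": unit_type,
            "MinimumCapacityUnits": min_units,   # scale-in floor
            "MaximumCapacityUnits": max_units,   # scale-out ceiling
        }
    }


policy = build_managed_scaling_policy(min_units=2, max_units=10)
print(policy)

# With boto3 installed and credentials configured, the policy could be
# attached to a running cluster roughly like this ("j-EXAMPLE" is a
# placeholder cluster ID):
#   boto3.client("emr").put_managed_scaling_policy(
#       ClusterId="j-EXAMPLE", ManagedScalingPolicy=policy)
```

Validating the limits locally keeps an obviously inverted range from ever reaching the service.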

On the Create Cluster page, go to Advanced cluster configuration and click the gray "Configure Sample Application" button at the top right if you want to run a sample application with sample data. Other tutorials cover how to connect to Phoenix using JDBC, create a view over an existing HBase table, and create a secondary index for increased read performance; how to connect to a Hive job flow running on Amazon Elastic MapReduce to create a secure and extensible platform for reporting and analytics; and a reference architecture for a consistent, scalable, and reliable stream processing pipeline based on Apache Flink using Amazon EMR, Amazon Kinesis, and Amazon Elasticsearch Service.

For more information, see Configure cluster hardware and networking.

If you are using Hive, it is recommended to use AWS Glue as the metadata provider for the Hive external table contexts. Apache Zeppelin is an open-source GUI that creates interactive and collaborative notebooks for data exploration using Spark. Data scientists use EMR to run deep learning and machine learning tools such as TensorFlow and Apache MXNet and, using bootstrap actions, add use case-specific tools and libraries.

Provide the entire definition of the work to be done in functions that you specify as steps when you create a cluster. You can submit additional steps, which run after any previous steps complete.
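The idea of defining work as steps can be sketched as follows. The helper `spark_step`, the bucket name, and the script paths are all hypothetical; the dictionary layout mirrors the step structure of the EMR API (a `HadoopJarStep` that runs `command-runner.jar`), which is a common way to invoke `spark-submit` on a cluster.

```python
# Sketch: define cluster work as an ordered list of steps.
# spark_step and the S3 paths below are placeholders for illustration.

def spark_step(name, script_uri, args=(), on_failure="CONTINUE"):
    """Return one step definition that runs a PySpark script via spark-submit."""
    return {
        "Name": name,
        "ActionOnFailure": on_failure,
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": ["spark-submit", "--deploy-mode", "cluster",
                     script_uri, *args],
        },
    }


# Steps are listed in the order they should run.
steps = [
    spark_step("extract", "s3://example-bucket/jobs/extract.py"),
    spark_step("aggregate", "s3://example-bucket/jobs/aggregate.py",
               args=["--date", "2024-01-01"]),
]

for step in steps:
    print(step["Name"], step["HadoopJarStep"]["Args"])

# With boto3, additional steps could be submitted to a running cluster
# roughly like this ("j-EXAMPLE" is a placeholder cluster ID):
#   boto3.client("emr").add_job_flow_steps(JobFlowId="j-EXAMPLE", Steps=steps)
```

The same list can be passed when the cluster is created or submitted later as additional steps.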

Amazon EMR does all the work involved with provisioning, managing, and maintaining the infrastructure and software of a Hadoop cluster. Unlike the rigid infrastructure of on-premises clusters, EMR decouples compute and storage, giving you the ability to scale each independently and take advantage of the tiered storage of Amazon S3. Each EC2 instance comes with a fixed amount of storage, referred to as "instance store", attached to the instance.

When you enable multi-master support, EMR configures the applications on the cluster for high availability and, in the event of failures, automatically fails over to a standby master so that your cluster is not disrupted. It also places your master nodes in distinct racks to reduce the risk of simultaneous failure.

Presto is an open-source distributed SQL query engine optimized for low-latency, ad hoc analysis of data. You can also connect to Amazon SageMaker Studio for large-scale model training, analysis, and reporting.

Regardless of which process is used, an S3 folder needs to be selected; by default, one is created. If you use this method, make sure to select auto-termination in the upcoming 'Auto-termination' section.
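To see why auto-termination matters, the sketch below estimates what an idle cluster costs and builds an idle-timeout setting. The hourly rate is a made-up number, not a real AWS price, and both helper names are hypothetical. The `IdleTimeout` field is expressed in seconds, and the bounds used here (60 seconds to seven days) are the documented limits as best understood here.

```python
# Sketch: estimate idle spend and build an auto-termination setting.
# The rate below is a placeholder, not an actual AWS price.

def idle_cost(node_count, hourly_rate_per_node, idle_hours):
    """Cost of leaving a cluster running with no work for idle_hours."""
    return node_count * hourly_rate_per_node * idle_hours


def auto_termination_policy(idle_minutes):
    """Return a policy that shuts the cluster down after idle_minutes idle."""
    seconds = idle_minutes * 60
    if not 60 <= seconds <= 604800:
        raise ValueError("idle timeout must be between 1 minute and 7 days")
    return {"IdleTimeout": seconds}


# A 20-node cluster forgotten for 12 hours at a hypothetical $1.00 per node-hour.
overnight = idle_cost(node_count=20, hourly_rate_per_node=1.00, idle_hours=12)
print(f"Idle overnight: ${overnight:.2f}")

# The same cluster with a one-hour idle timeout stops billing after an hour idle.
capped = idle_cost(node_count=20, hourly_rate_per_node=1.00, idle_hours=1)
print(f"With auto-termination: ${capped:.2f}")
print(auto_termination_policy(idle_minutes=60))
```

Even with invented prices, the comparison shows how an idle timeout bounds the cost of a forgotten cluster.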
