YOLO-NAS

As usual, we have prepared a Google Colab that you can open in a separate tab to follow our tutorial step by step. Before we start training, we need to prepare the Python environment.

Pose estimation plays a crucial role in computer vision, with applications that include monitoring patient movements in healthcare, analyzing the performance of athletes in sports, creating seamless human-computer interfaces, and improving robotic systems. The YOLO-NAS Pose model offers an excellent balance between latency and accuracy. Instead of first detecting the person and then estimating their pose, it detects people and estimates their poses in a single step. The Object Detection models and the Pose Estimation models share the same backbone and neck design but differ in the head. The architecture itself comes from a neural architecture search, which navigates the vast architecture search space and returns the best architectural designs.

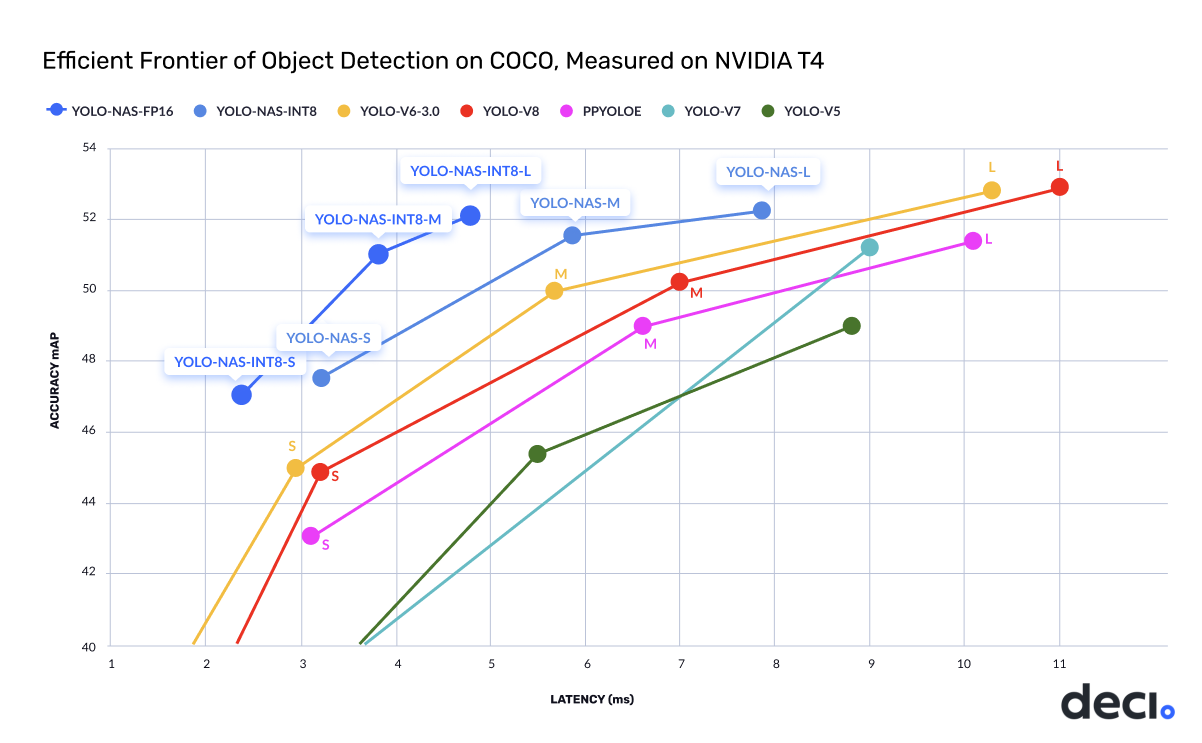

YOLO-NAS brings notable enhancements in quantization support and in the balance between accuracy and latency, which marks a significant advancement in the field of object detection. The model includes quantization-friendly blocks. Quantization converts the weights, biases, and activations of a neural network from floating-point values to 8-bit integer values (INT8), which improves model efficiency. The transition to the INT8 quantized version results in only a minimal precision reduction, a major improvement over other YOLO models. Together, these enhancements produce an architecture with strong object detection capabilities and performance, and they expand what is possible in real-time object detection.

The article begins with a concise exploration of the model's architecture, followed by an in-depth explanation of the AutoNAC concept.

YOLO-NAS models are pre-trained on the Objects365 dataset, a multi-category collection of 2 million images and 30 million bounding boxes. They are then trained on pseudo-labeled images extracted from the COCO unlabeled images. The training process is further enriched through knowledge distillation and Distribution Focal Loss (DFL).
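To make the float-to-INT8 conversion described above more concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is an illustration of the general idea only, not the quantization scheme used inside YOLO-NAS; the function names and the sample weights are made up for this example.

```python
def quantize_int8(values):
    """Map floats to integers in [-128, 127] using one shared scale.

    Illustrative symmetric quantization; NOT the YOLO-NAS implementation.
    """
    max_abs = max(abs(v) for v in values)
    # One INT8 step represents `scale` units of the original float range.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-128, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 representation."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to demonstrate the round trip.
weights = [0.52, -1.27, 0.003, 0.9]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# The round-trip error per value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q_weights, scale, max_error)
```

The small gap between `weights` and `restored` is the precision cost of quantization; a quantization-friendly architecture aims to keep that cost from hurting accuracy.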

If you have a dataset in YOLO format, feel free to use it. To cite this post in your research, use the following entry: Piotr Skalski, YOLO-NAS.
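For readers unsure whether their data is in YOLO format, the sketch below parses one annotation line. It assumes the common convention of one text file per image, where each line holds `class_id x_center y_center width height` with coordinates normalized to the range 0 to 1. The helper names are hypothetical and exist only for this example.

```python
from typing import NamedTuple


class YoloBox(NamedTuple):
    class_id: int
    x_center: float
    y_center: float
    width: float
    height: float


def parse_yolo_line(line: str) -> YoloBox:
    """Parse one 'class x_center y_center width height' annotation line."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    coords = [float(p) for p in parts[1:]]
    if not all(0.0 <= c <= 1.0 for c in coords):
        raise ValueError(f"coordinates must be normalized to [0, 1]: {line!r}")
    return YoloBox(class_id, *coords)


def to_pixel_corners(box: YoloBox, image_width: int, image_height: int):
    """Convert a normalized center/size box to pixel corner coordinates."""
    x_min = (box.x_center - box.width / 2) * image_width
    y_min = (box.y_center - box.height / 2) * image_height
    x_max = (box.x_center + box.width / 2) * image_width
    y_max = (box.y_center + box.height / 2) * image_height
    return x_min, y_min, x_max, y_max


# A made-up annotation line for a 100x200 pixel image.
box = parse_yolo_line("0 0.5 0.5 0.2 0.4")
corners = to_pixel_corners(box, image_width=100, image_height=200)
print(box, corners)
```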

YOLO-NAS is the product of advanced Neural Architecture Search technology, designed to address the limitations of previous YOLO models. With significant improvements in quantization support and accuracy-latency trade-offs, it represents a major leap in object detection. When converted to its INT8 quantized version, the model experiences only a minimal precision drop, a significant improvement over other models. The models come in several variants, each designed to offer a balance between mean Average Precision (mAP) and latency, so you can choose the one that fits your speed and accuracy needs. The package provides a user-friendly Python API to streamline the process. For handling inference results, see Predict mode.
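The Python API mentioned above could be used roughly as follows. This is a sketch that assumes the package in question is `ultralytics`, which exposes a `NAS` model class; the weights file name `yolo_nas_s.pt` (the small variant) and the image path are placeholders you would replace with your own. The import sits inside the function so the snippet can be loaded even where the package is not installed.

```python
def run_yolo_nas(image_source: str, weights: str = "yolo_nas_s.pt"):
    """Load a pre-trained YOLO-NAS variant and run prediction on one source.

    Assumes the `ultralytics` package is installed; the weights are
    downloaded on first use if they are not found locally.
    """
    # Imported lazily so defining this function does not require the package.
    from ultralytics import NAS

    model = NAS(weights)
    model.info()  # print a summary of the loaded model
    return model.predict(image_source)


# Example call (hypothetical image path, requires `ultralytics`):
# results = run_yolo_nas("path/to/image.jpg")
```

Swapping the weights argument for a different variant is the simplest way to trade accuracy against latency.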

YOLO-NAS lives in an open source training library for building, training, and fine-tuning production-ready, state-of-the-art (SOTA) deep learning vision models. You can load and fine-tune pre-trained SOTA models that incorporate best practices and validated hyper-parameters for achieving best-in-class accuracy. For more information on how to do it, go to Getting Started. More examples of how and why to use recipes can be found in Recipes.

Once the model has been created, load it to the GPU device, if available. Remember that the model is still being actively developed.
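Loading the model to the GPU when one is available might look like the sketch below. It assumes PyTorch and the `super-gradients` package are installed, and that the model is fetched with `models.get` using the `yolo_nas_s` name and COCO pre-trained weights; the helper function names are hypothetical.

```python
def pick_device_name(cuda_available: bool) -> str:
    """Return the device string to use given CUDA availability."""
    return "cuda" if cuda_available else "cpu"


def load_yolo_nas_on_device(model_name: str = "yolo_nas_s"):
    """Fetch a pre-trained YOLO-NAS model and move it to GPU or CPU.

    Assumes `torch` and `super_gradients` are installed; both are imported
    lazily so this snippet can be loaded without them.
    """
    import torch
    from super_gradients.training import models

    device = torch.device(pick_device_name(torch.cuda.is_available()))
    model = models.get(model_name, pretrained_weights="coco")
    return model.to(device), device


# Example call (downloads weights, requires the packages above):
# model, device = load_yolo_nas_on_device()
```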

Developing a new YOLO-based architecture can redefine state-of-the-art (SOTA) object detection by addressing the existing limitations and incorporating recent advancements in deep learning.

The nano model is the fastest variant, with inference speed measured in fps on a T4 GPU. To maintain the stability of the environment, it is a good idea to pin a specific version of the package. A GPU equipped with Tensor Cores contributes to faster and more efficient processing, which makes it particularly well suited to deep learning applications. Below is a detailed overview of each model, including links to their pre-trained weights, the tasks they support, and their compatibility with different operating modes.
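As a small aid for the version pinning advice above, the following sketch builds a pinned requirement line and checks whether the installed version matches it, using only the standard library. The package name `super-gradients` and the version string `0.0.0` are placeholders, not a recommendation of any particular release.

```python
from importlib.metadata import PackageNotFoundError, version


def requirement_line(package: str, pinned_version: str) -> str:
    """Build a pinned requirement such as 'name==1.2.3' for requirements.txt."""
    return f"{package}=={pinned_version}"


def is_pinned(package: str, pinned_version: str) -> bool:
    """Return True only if `package` is installed at exactly `pinned_version`."""
    try:
        return version(package) == pinned_version
    except PackageNotFoundError:
        # The package is not installed in this environment at all.
        return False


# Placeholder package name and version, used for illustration only.
line = requirement_line("super-gradients", "0.0.0")
print(line, is_pinned("super-gradients", "0.0.0"))
```

Running a check like this at the top of a notebook makes an accidental upgrade visible before training starts.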
