Whisper github

Stable: v1. The entire high-level implementation of the model is contained in whisper.

WhisperSpeech is an Open Source text-to-speech system built by inverting Whisper. It was previously known as spear-tts-pytorch. We want this model to be like Stable Diffusion but for speech: both powerful and easily customizable. We are working only with properly licensed speech recordings and all the code is Open Source, so the model will always be safe to use for commercial applications. Currently the models are trained on the English LibreLight dataset. In the next release we want to target multiple languages (Whisper and EnCodec are both multilanguage). If you have questions or want to help, you can find us in the audio-generation channel on the LAION Discord server.

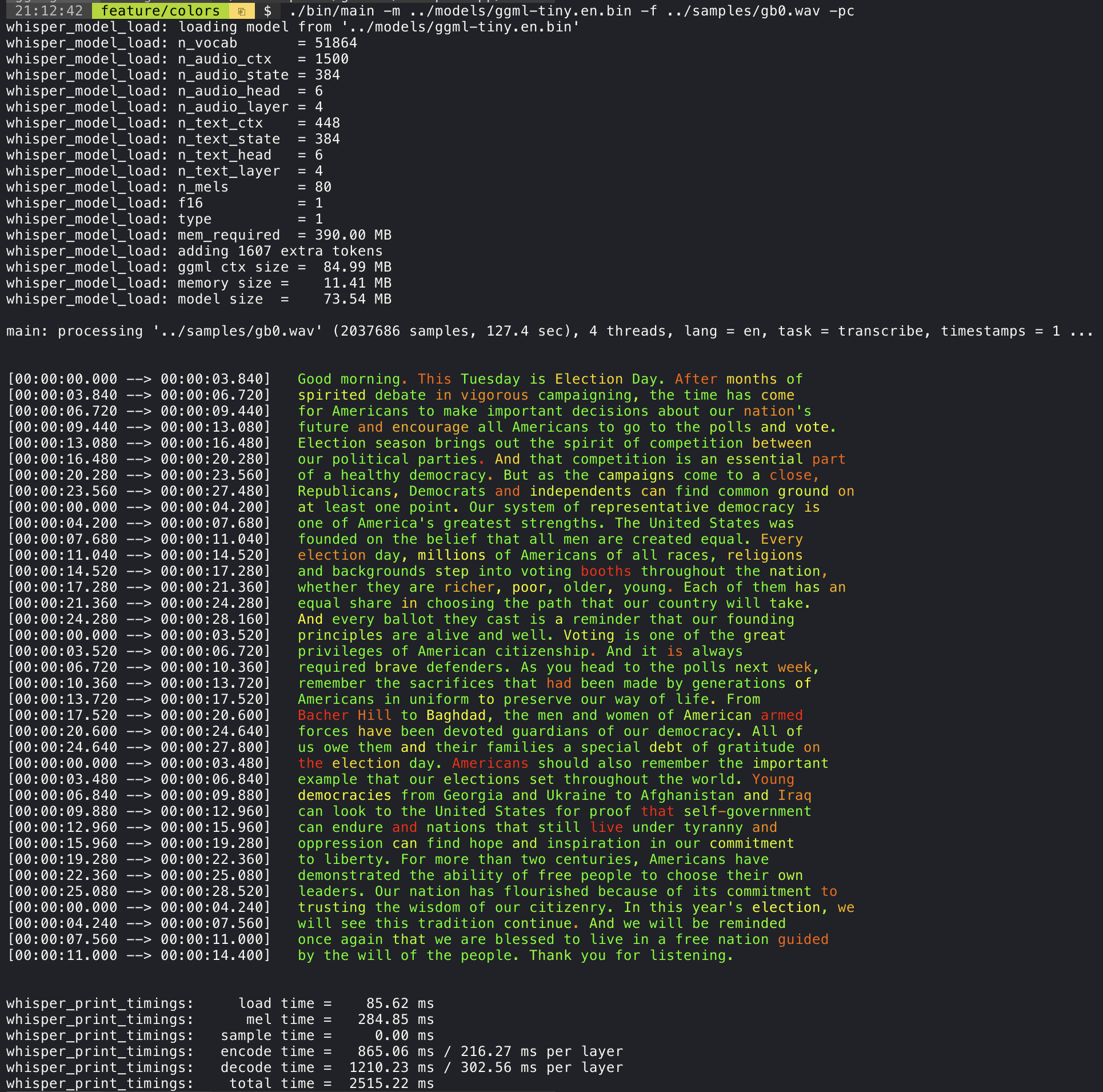

WhisperX: this repository provides fast automatic speech recognition (70x realtime with large-v2) with word-level timestamps and speaker diarization.

Whilst OpenAI's Whisper does produce highly accurate transcriptions, the corresponding timestamps are at the utterance level, not per word, and can be inaccurate by several seconds. OpenAI's Whisper also does not natively support batching.

Phoneme-based ASR: a suite of models finetuned to recognise the smallest unit of speech distinguishing one word from another. A popular example model is wav2vec2.

Forced alignment refers to the process by which orthographic transcriptions are aligned to audio recordings to automatically generate phone-level segmentation.

Speaker diarization is the process of partitioning an audio stream containing human speech into homogeneous segments according to the identity of each speaker.

Please refer to the CTranslate2 documentation. You may also need to install ffmpeg, rust etc. Some problems are due to dependency conflicts between faster-whisper and pyannote-audio 3. Please see the corresponding issue for more details and potential workarounds.

Compare this to original Whisper out of the box, where many transcriptions are out of sync.
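
To make the combination of word-level timestamps and speaker diarization more concrete, here is a minimal sketch in Python. It is not WhisperX's implementation: the `Word` and `SpeakerTurn` types, the `assign_speakers` function and the sample data are all hypothetical, and the rule used (give each word the speaker whose turn overlaps it the most in time) is just one simple way the two outputs could be merged.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Word:
    """A transcribed word with start/end times in seconds (hypothetical type)."""
    text: str
    start: float
    end: float


@dataclass
class SpeakerTurn:
    """A diarization segment attributed to one speaker (hypothetical type)."""
    speaker: str
    start: float
    end: float


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(
    words: List[Word], turns: List[SpeakerTurn]
) -> List[Tuple[str, Optional[str]]]:
    """Label each word with the speaker whose turn overlaps it the most.

    Words that overlap no turn at all are labelled None.
    """
    labelled = []
    for word in words:
        best_speaker = None
        best_overlap = 0.0
        for turn in turns:
            shared = overlap(word.start, word.end, turn.start, turn.end)
            if shared > best_overlap:
                best_overlap = shared
                best_speaker = turn.speaker
        labelled.append((word.text, best_speaker))
    return labelled


# Made-up example data: three words and two speaker turns.
words = [Word("hello", 0.0, 0.4), Word("there", 0.5, 0.9), Word("hi", 1.2, 1.5)]
turns = [SpeakerTurn("SPEAKER_00", 0.0, 1.0), SpeakerTurn("SPEAKER_01", 1.0, 2.0)]
labelled = assign_speakers(words, turns)
```

The sketch also shows why accurate word-level timestamps matter here: if word times were off by several seconds, words near a speaker change would be attributed to the wrong speaker.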

To transcribe an audio file containing non-English speech, you can specify the language using the --language option.
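
As an illustration of the --language option, the following Python sketch builds the corresponding `whisper` command line and runs it only if the CLI is installed. The helper names `build_whisper_command` and `transcribe` and the file name `japanese.wav` are assumptions made for this example; only the `whisper` command and its `--language` option come from the text above.

```python
import shutil
import subprocess
from typing import List, Optional


def build_whisper_command(audio_path: str, language: str) -> List[str]:
    """Return the argument list for: whisper <audio_path> --language <language>."""
    return ["whisper", audio_path, "--language", language]


def transcribe(audio_path: str, language: str) -> Optional[subprocess.CompletedProcess]:
    """Run the whisper CLI if it is on PATH; otherwise print the command and return None."""
    command = build_whisper_command(audio_path, language)
    if shutil.which("whisper") is None:
        print("whisper CLI not found; would run:", " ".join(command))
        return None
    # check=True raises CalledProcessError if whisper exits with an error.
    return subprocess.run(command, check=True)


# Hypothetical input file containing Japanese speech.
command = build_whisper_command("japanese.wav", "Japanese")
```

Passing the arguments as a list, rather than as one shell string, avoids quoting problems when a file name contains spaces.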

A nearly-live implementation of OpenAI's Whisper, using sounddevice. Requires an existing Whisper install. The main repo for Stage Whisper, a free, secure, and easy-to-use transcription app for journalists, powered by OpenAI's Whisper automatic speech recognition (ASR) machine learning models. The application is built using Nuxt, a JavaScript framework based on Vue. Production-ready audio and video transcription app that can run on your laptop or in the cloud.

Released: Nov 17. Whisper is a general-purpose speech recognition model. It is trained on a large dataset of diverse audio and is also a multitasking model that can perform multilingual speech recognition, speech translation, and language identification. A Transformer sequence-to-sequence model is trained on various speech processing tasks, including multilingual speech recognition, speech translation, spoken language identification, and voice activity detection. These tasks are jointly represented as a sequence of tokens to be predicted by the decoder, allowing a single model to replace many stages of a traditional speech-processing pipeline. The multitask training format uses a set of special tokens that serve as task specifiers or classification targets. We used Python 3.
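
The idea of special tokens acting as task specifiers can be sketched as follows. This is only an illustration on plain strings, not the library's tokenizer: the function `build_task_prefix` is hypothetical, and the token spellings (`<|startoftranscript|>`, a language token such as `<|en|>`, `<|transcribe|>` or `<|translate|>`, and `<|notimestamps|>`) follow the Whisper tokenizer as far as we know, so treat them as an assumption.

```python
from typing import List


def build_task_prefix(language: str, task: str = "transcribe",
                      timestamps: bool = False) -> List[str]:
    """Assemble the special-token prefix that tells the decoder what to do.

    language: short language code, e.g. "en" or "ja".
    task: "transcribe" (same-language text) or "translate" (to English).
    timestamps: if False, a token is appended asking for no timestamp tokens.
    """
    if task not in ("transcribe", "translate"):
        raise ValueError(f"unknown task: {task!r}")
    prefix = ["<|startoftranscript|>", f"<|{language}|>", f"<|{task}|>"]
    if not timestamps:
        prefix.append("<|notimestamps|>")
    return prefix


# Same model, different tasks: only the prefix changes.
english_transcription = build_task_prefix("en")
japanese_translation = build_task_prefix("ja", task="translate", timestamps=True)
```

Because the task is selected purely by this prefix, the same decoder can transcribe, translate, or identify the language without separate models for each stage.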

Whisper is a pre-trained model for automatic speech recognition (ASR) and speech translation. Trained on thousands of hours of labelled data, Whisper models demonstrate a strong ability to generalise to many datasets and domains without the need for fine-tuning. The original code repository can be found here. Whisper large-v3 has the same architecture as the previous large models, except for a few minor differences.

Unofficial Deno wrapper for the OpenAI API. The tool simply runs the Encoder part of the model and prints how much time it took to execute it. The main example provides support for output of karaoke-style movies, where the currently pronounced word is highlighted.

OpenAI explains that Whisper is an automatic speech recognition (ASR) system trained on many thousands of hours of multilingual and multitask supervised data collected from the Web. Text is easier to search and store than audio. However, transcribing audio to text can be quite laborious.

Older progress updates are archived here. We gave two presentations diving deeper into WhisperSpeech. This can result in a significant speed-up: more than 3x faster compared with CPU-only execution. Here is another example: transcribing a speech in about half a minute on a MacBook M1 Pro, using medium.
