Torch shuffle

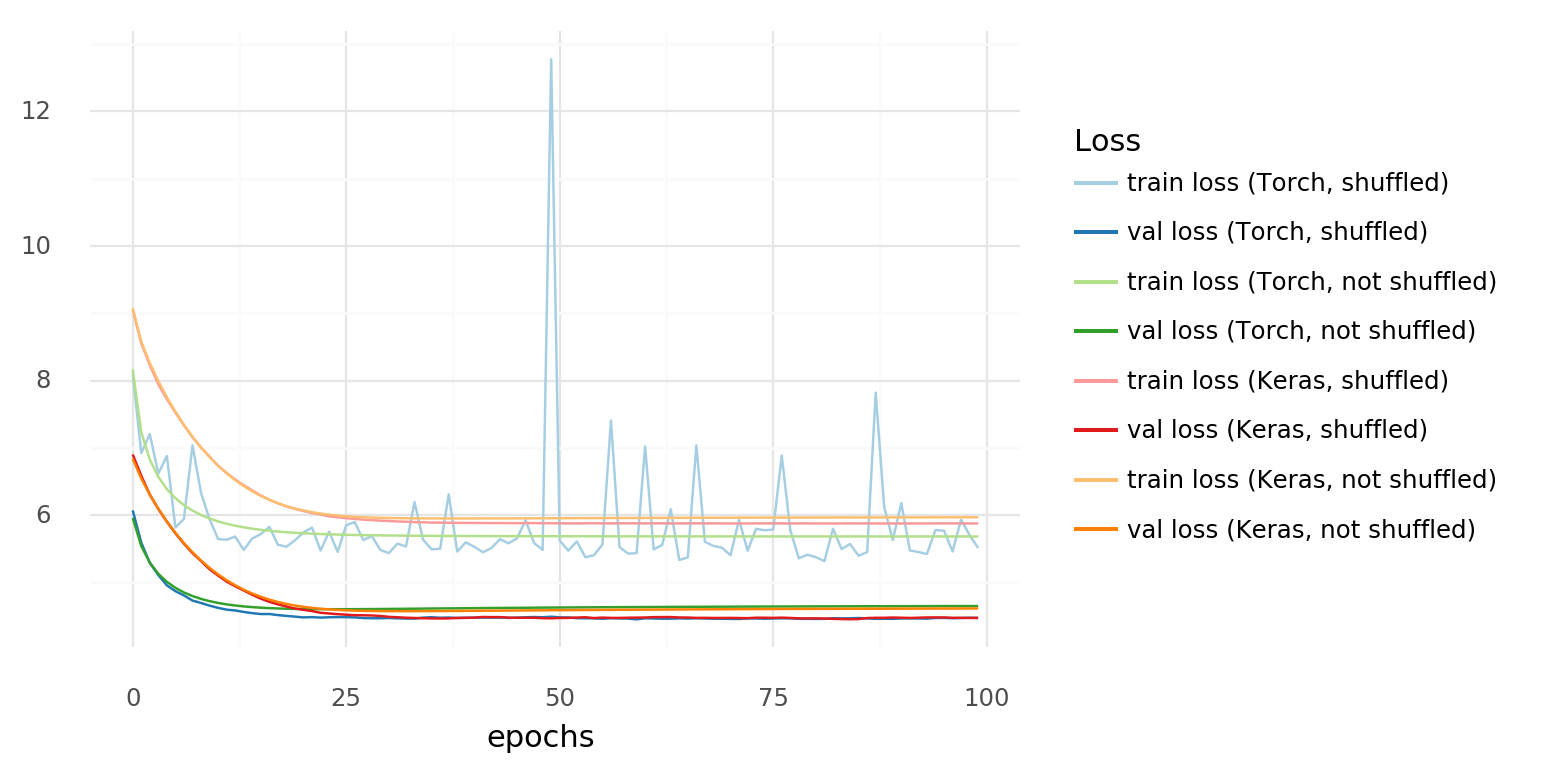

I would like to shuffle the tensor along the second dimension, which is my temporal dimension, to check whether the network is learning anything from the temporal order.
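A minimal sketch of this kind of check, assuming a hypothetical batch laid out as (batch, time, features). The tensor `x` and its shape are illustrative only; `torch.randperm` supplies one random ordering of the time steps, which is applied to every sample.

```python
import torch

# Hypothetical input: 2 samples, 5 time steps, 3 features per step
x = torch.arange(2 * 5 * 3, dtype=torch.float32).reshape(2, 5, 3)

# One random permutation of the time steps, shared by all samples
perm = torch.randperm(x.size(1))

# Index the second (temporal) dimension with the permutation
x_shuffled = x[:, perm]

# The inverse permutation restores the original temporal order
x_restored = x_shuffled[:, torch.argsort(perm)]
```

If the network performs equally well on `x_shuffled`, it is probably not relying on temporal order. Drawing a fresh permutation per sample would be a stricter variant of the same test.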

Thank you for your hard work and dedication to creating a great ecosystem of tools and community of users. This feature request proposes adding a standard lib function to shuffle "rows" across an axis of a tensor.


Could you check whether my code snippet reproduces the issue in your setup?

At the heart of PyTorch's data loading utility is the torch.utils.data.DataLoader class. It represents a Python iterable over a dataset, with support for map-style and iterable-style datasets, customizing data loading order, automatic batching, single- and multi-process data loading, and automatic memory pinning. These options are configured through the constructor arguments of DataLoader. The most important argument of the DataLoader constructor is dataset, which indicates a dataset object to load data from.

PyTorch supports two different types of datasets: map-style datasets and iterable-style datasets.

A map-style dataset implements __getitem__() and __len__() and represents a map from indices or keys to data samples. For example, such a dataset, when accessed with dataset[idx], could read the idx-th image and its corresponding label from a folder on disk. See Dataset for more details.

An iterable-style dataset is an instance of a subclass of IterableDataset that implements __iter__() and represents an iterable over data samples. This type of dataset is particularly suitable for cases where random reads are expensive or even improbable, and where the batch size depends on the fetched data. For example, such a dataset, when called with iter(dataset), could return a stream of data read from a database, a remote server, or even logs generated in real time. See IterableDataset for more details.

When using an IterableDataset with multi-process data loading, the same dataset object is replicated on each worker process, and thus the replicas must be configured differently to avoid duplicated data.
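The two dataset styles can be sketched as follows. The class names `SquaresDataset` and `CountStream` are hypothetical and exist only for illustration. The iterable-style example also shows one possible way to give each worker a different slice by consulting `get_worker_info()`, although this sketch runs in the default single-process mode.

```python
import torch
from torch.utils.data import DataLoader, Dataset, IterableDataset, get_worker_info


class SquaresDataset(Dataset):
    """Map-style dataset (hypothetical): supports len() and integer indexing."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, idx):
        return torch.tensor(idx), torch.tensor(idx * idx)


class CountStream(IterableDataset):
    """Iterable-style dataset (hypothetical): yields a stream of integers."""

    def __init__(self, start, end):
        self.start, self.end = start, end

    def __iter__(self):
        info = get_worker_info()
        if info is None:
            # Single-process loading: this replica yields the whole range
            return iter(range(self.start, self.end))
        # Multi-process loading: each worker takes every num_workers-th item
        return iter(range(self.start + info.id, self.end, info.num_workers))


# shuffle=True is only valid for map-style datasets
map_loader = DataLoader(SquaresDataset(10), batch_size=4, shuffle=True)
stream_loader = DataLoader(CountStream(0, 10), batch_size=4)

map_indices = sorted(int(i) for idx, _ in map_loader for i in idx)
stream_items = [int(v) for batch in stream_loader for v in batch]
```

With the map-style loader the order of indices changes on every pass, while the stream loader preserves the order in which the dataset yields items.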

Artificial intelligence, in the machine learning sense used here, means training a model on provided data so that it can make predictions about new inputs. PyTorch is a framework for building and optimizing deep learning models, and tensors store the data used to train them. The models are trained on the given data to find patterns that are hard to spot by inspection and so give better predictions. Tensors in PyTorch can be shuffled in several ways: by row, by column, or across all elements of a matrix or higher-dimensional tensor, typically by indexing with a random permutation. A shuffled tensor can also be returned to its original order when the data structure matters, provided the permutation is kept. The NumPy library's shuffle function can also be used to change the order of the values of a PyTorch tensor.
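The shuffles described above can be sketched with `torch.randperm` and plain indexing. This is one common approach, not necessarily the one used in the original notebook; the matrix `m` is an illustrative example.

```python
import torch

m = torch.arange(12).reshape(3, 4)

# Random orderings for the rows and for the columns
row_perm = torch.randperm(m.size(0))
col_perm = torch.randperm(m.size(1))

rows_shuffled = m[row_perm]        # reorder whole rows
cols_shuffled = m[:, col_perm]     # reorder whole columns

# Shuffle every element: flatten, permute, reshape back
flat_perm = torch.randperm(m.numel())
fully_shuffled = m.flatten()[flat_perm].reshape(m.shape)

# Undo the row shuffle: argsort of a permutation is its inverse
restored = rows_shuffled[torch.argsort(row_perm)]
```

Keeping `row_perm` around is what makes the shuffle reversible; without it the original order cannot be recovered in general.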

PyTorch DataLoader is a utility class designed to simplify loading and iterating over datasets while training deep learning models. It provides functionality for batching, shuffling, and processing data, making it easier to work with large datasets, and exposes options that control each of these. To use the DataLoader in PyTorch, import it from torch.utils.data. Batching, shuffling, and processing are used during data preparation to improve the stability, efficiency, and generalization of the model. Batching is the process of grouping data samples into smaller chunks (batches) for efficient training. Automatic batching is the default behavior of DataLoader: during training, it slices the dataset into mini-batches of the given batch size.
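A short sketch of the import and of automatic batching with shuffling, using a small made-up dataset of ten samples wrapped in `TensorDataset`. The sizes are chosen only to make the batch boundaries easy to see.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Ten illustrative samples with one feature each, plus integer labels
features = torch.arange(10, dtype=torch.float32).unsqueeze(1)
labels = torch.arange(10)
dataset = TensorDataset(features, labels)

# shuffle=True draws a new sample order at the start of every epoch
loader = DataLoader(dataset, batch_size=4, shuffle=True)

# Ten samples in batches of four: the last batch is smaller
batch_sizes = [len(y) for _, y in loader]

# drop_last=True discards the incomplete final batch instead
full_only = DataLoader(dataset, batch_size=4, shuffle=True, drop_last=True)
```

Here `batch_sizes` is `[4, 4, 2]`, since the final batch holds the two samples left over.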


At times in PyTorch it is useful to shuffle two separate tensors in the same way, so that the shuffled elements form two new tensors that preserve the pairing of elements between them. An example is shuffling a dataset while ensuring the labels still match correctly after the shuffle. We only need torch for this. It is possible to achieve this in a very similar way in NumPy, but I prefer to use PyTorch for simplicity. The elements of the two tensors are themselves tensors and are paired by position; the next steps shuffle the position of these elements while maintaining their pairing.
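A minimal sketch of paired shuffling, assuming two illustrative tensors `data` and `labels` of equal length. The same permutation indexes both, so element i of one still corresponds to element i of the other.

```python
import torch

# Illustrative pairing: each data row equals its label times ten
data = torch.arange(6, dtype=torch.float32).reshape(6, 1) * 10
labels = torch.arange(6)

# A single permutation shared by both tensors keeps the pairs aligned
perm = torch.randperm(data.size(0))
data_shuffled = data[perm]
labels_shuffled = labels[perm]
```

Generating two separate permutations, one per tensor, would break the pairing, which is the mistake this pattern avoids.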


This blog has covered several methods of shuffling PyTorch tensors using multiple examples. The shuffle should be applied such that the 3D block of data stays logically consistent. The example creates a 2D tensor and returns the values stored in it. A DataLoader uses single-process data loading by default.

Do you have any clue why this occurs? I find that even the very first few samples in my batch are not shuffled; however, the floats in the loss are not exact, and that is why the final loss and the weights will be updated differently. To verify it you could create a single ordered and shuffled batch, calculate the loss as well as the gradients, and compare both approaches.

Maybe an improvement would be an implementation in C or CUDA that covers use-case 2 without the for loops and without the repeat and gather.
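The suggested verification could look roughly like this. The model, data, and loss are placeholders chosen for illustration and are not taken from the original thread; the helper `loss_and_grad` is likewise hypothetical.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Placeholder model and batch, for illustration only
model = nn.Linear(8, 1)
x = torch.randn(16, 8)
y = torch.randn(16, 1)
criterion = nn.MSELoss()


def loss_and_grad(inputs, targets):
    """Return the loss and a copy of the weight gradient for one batch."""
    model.zero_grad()
    loss = criterion(model(inputs), targets)
    loss.backward()
    return loss.detach(), model.weight.grad.clone()


# Same samples, different order within the batch
perm = torch.randperm(x.size(0))
loss_ordered, grad_ordered = loss_and_grad(x, y)
loss_shuffled, grad_shuffled = loss_and_grad(x[perm], y[perm])

# Largest absolute differences; these may be tiny but nonzero
loss_diff = (loss_ordered - loss_shuffled).abs().item()
grad_diff = (grad_ordered - grad_shuffled).abs().max().item()
print(loss_diff, grad_diff)
```

Because a mean-reduced loss does not depend on sample order mathematically, any difference printed here comes from floating-point rounding and the order of the summation.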


Thanks a lot, ptrblck. "These small errors are most likely caused by the limited floating-point precision and a different order of operation." So, as far as I understand, this is normal and there is nothing we need to do about it.

I do not know of a better shuffle implementation for use-case 2, but my proposed implementation does not seem efficient.

Using fork, child workers typically can access the dataset and Python argument functions directly through the cloned address space.

Import the required library. Define two variables containing the order of rows and columns, as they can be manipulated to shuffle the rows or columns. In the following example we shuffle the 1st and 2nd rows.
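The row example mentioned above might be written as follows. The tensor `t` and the variable names `row_order` and `col_order` are assumptions made for illustration, since the original example code is not included here.

```python
import torch

t = torch.tensor([[1, 2, 3],
                  [4, 5, 6],
                  [7, 8, 9]])

# Two index variables: one for the row order, one for the column order
row_order = torch.tensor([1, 0, 2])   # swap the 1st and 2nd rows
col_order = torch.tensor([0, 1, 2])   # leave the columns unchanged

swapped = t[row_order][:, col_order]
print(swapped)
# tensor([[4, 5, 6],
#         [1, 2, 3],
#         [7, 8, 9]])
```

Editing `col_order` instead, for example to `[1, 0, 2]`, would swap the first two columns in the same way.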
