TaskFlow API

The TaskFlow API reduces boilerplate and improves code readability. For tasks with complex dependencies or requirements, Airflow 2 … By leveraging the TaskFlow API, developers can create DAGs that are more maintainable, scalable, and easier to understand, making Apache Airflow an even more powerful tool for workflow orchestration.

TaskFlow takes care of moving inputs and outputs between your tasks using XComs, and it calculates dependencies automatically. When you call a TaskFlow function in your DAG file, the function is not executed; instead you get an object representing the XCom for the result (an XComArg), which you can then use as an input to downstream tasks or operators. To learn more about using TaskFlow, consult the TaskFlow tutorial.

You can access Airflow context variables by adding them as keyword arguments to a TaskFlow function. For a full list of context variables, see the context variables reference.

As mentioned, TaskFlow uses XCom to pass variables to each task, so any value used as an argument must be serializable. Out of the box, Airflow supports all built-in types, such as int or str, as well as objects decorated with @dataclass or @attr.define. For example, a Dataset, which is decorated with @attr.define, can be passed between TaskFlow tasks. An additional benefit of using a Dataset is that it automatically registers as an inlet when it is used as an input argument, and as an outlet when your task returns a Dataset or a list[Dataset].

You might also want to pass custom objects. Typically you would decorate your classes with @dataclass or @attr.define, but sometimes you might want to control serialization yourself.

The TaskFlow model is available in Airflow 2.0 and later.

You can use TaskFlow decorator functions (for example, @task) to pass data between tasks by providing the output of one task as an argument to another task. Decorators are a simpler, cleaner way to define your tasks and DAGs, and they can be used in combination with traditional operators. In this guide, you'll learn about the benefits of decorators and the decorators available in Airflow. You'll also review an example DAG and learn when you should use decorators and how you can combine them with traditional operators in a DAG. In Python, decorators are functions that take another function as an argument and extend the behavior of that function.
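To make the plain Python idea concrete, here is a minimal decorator with no Airflow involved; `log_calls` and `add` are hypothetical names chosen for illustration. The decorator wraps the original function, adds behavior (recording and printing each call), and still returns the original result.

```python
import functools


def log_calls(func):
    """Wrap func so that every call is recorded and printed."""

    @functools.wraps(func)  # keep func's name and docstring on the wrapper
    def wrapper(*args, **kwargs):
        wrapper.calls.append((args, kwargs))
        print(f"Calling {func.__name__} with {args} {kwargs}")
        return func(*args, **kwargs)

    wrapper.calls = []  # extra state added by the decorator
    return wrapper


@log_calls
def add(x, y):
    return x + y


result = add(2, 3)
```

Airflow's `@task` and `@dag` follow the same pattern, but the behavior they add is turning the function into a task or DAG rather than logging.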

TaskFlow is a programming model used in Apache Airflow that allows users to write tasks and dependencies using Python functions. A DAG is the collection of these tasks together with the dependencies between them.

In the context of Airflow, decorators contain more functionality than a plain Python decorator, but the basic idea is the same: the Airflow decorator function extends the behavior of a normal Python function to turn it into an Airflow task, task group, or DAG.

You can call a TaskFlow function with a plain value or variable by passing it as an argument to the function. You can also assign the output of a called decorated task to a Python object and pass it as an argument into another decorated task. Decorated tasks can be combined with traditional operators as well; for example, you can add an EmailOperator that receives a decorated task's output.

Reusability: decorated tasks can be reused across multiple DAGs with different parameters. Avoid duplicating functionality provided by existing decorators, and focus on adding unique value to your workflows.

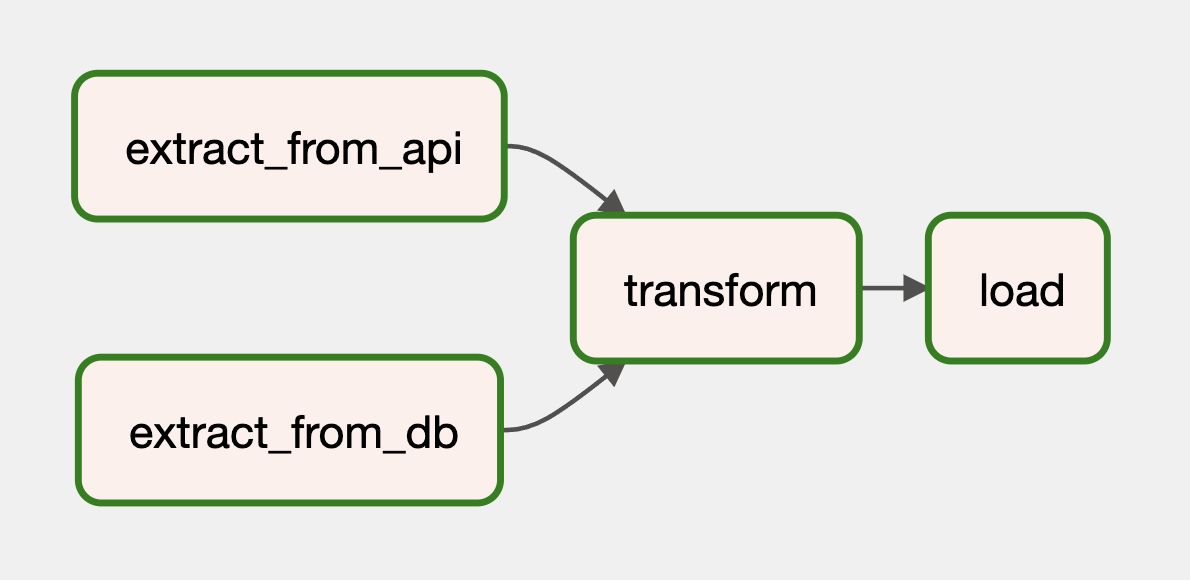

This tutorial builds on the regular Airflow tutorial and focuses specifically on writing data pipelines using the TaskFlow API paradigm, which was introduced as part of Airflow 2.0. The data pipeline chosen here is a simple pattern with three separate Extract, Transform, and Load tasks.

In the Transform step, the computed value is then put into XCom so that it can be processed by the next task. The function decorated with @dag must also be called in the DAG file, for example taskflow(), so that the DAG is actually created.

To control serialization yourself, add a serialize() method and a static method deserialize(data: dict, version: int) to your class.

When a decorated function runs in a container (for example, with @task.docker), note that the Python source code extracted from the decorated function and any callable arguments are sent to the container via encoded and pickled environment variables, so their length is not unbounded; the exact limit depends on system settings.
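A minimal sketch of a class that controls its own serialization, following the `serialize()` / `deserialize(data: dict, version: int)` contract described above. The `Temperature` class is hypothetical, and the `__version__` class attribute is an assumption based on the object-versioning convention in the Airflow documentation; the round trip at the end only imitates what Airflow would do and runs without Airflow installed.

```python
from typing import ClassVar


class Temperature:
    """Hypothetical custom type that controls its own XCom serialization."""

    # Versioning the serialized form lets deserialize() handle old payloads.
    __version__: ClassVar[int] = 1

    def __init__(self, celsius: float):
        self.celsius = celsius

    def serialize(self) -> dict:
        # Return a plain, JSON-friendly dict describing the object.
        return {"celsius": self.celsius}

    @staticmethod
    def deserialize(data: dict, version: int) -> "Temperature":
        # Refuse payloads written by a newer, unknown version of the class.
        if version > Temperature.__version__:
            raise TypeError(f"version {version} > {Temperature.__version__}")
        return Temperature(data["celsius"])


# Round trip imitating an object crossing XCom.
t = Temperature(21.5)
restored = Temperature.deserialize(t.serialize(), Temperature.__version__)
```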
