Tacotron 2 github

Unlike many previous implementations, this is a comprehensive Tacotron 2: the model supports both single- and multi-speaker TTS, along with several techniques, such as a reduction factor, that improve the efficiency of the model and the robustness of the decoder alignment. The model can learn alignment in only 5k steps. Note that only a batch size of 1 is currently supported, due to the autoregressive model architecture.
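To make the reduction factor mentioned above concrete, here is a minimal sketch of the usual idea: the decoder predicts `r` mel frames per step, so the target sequence is grouped into blocks of `r` frames. The function name `group_frames` and the zero-padding of the tail are assumptions for illustration, not code taken from the implementation described here.

```python
def group_frames(mel_frames, r):
    """Group mel frames into decoder targets of r frames per step.

    mel_frames: list of frames, each a list of mel-bin values.
    The tail is padded with zero frames so the length is a multiple of r
    (an assumption; real implementations may pad differently).
    """
    n_mels = len(mel_frames[0])
    pad = (-len(mel_frames)) % r
    padded = mel_frames + [[0.0] * n_mels for _ in range(pad)]
    steps = []
    for i in range(0, len(padded), r):
        step = []
        for frame in padded[i:i + r]:
            step.extend(frame)  # concatenate r frames into one target vector
        steps.append(step)
    return steps


mel = [[float(t), float(t) + 0.5] for t in range(5)]  # 5 frames, 2 mel bins
steps = group_frames(mel, r=2)
print(len(steps), len(steps[0]))  # 3 decoder steps, each 4 values wide
```

With `r=2` the decoder runs for roughly half as many steps, which shortens the sequence the attention has to align.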

Tacotron 2 - PyTorch implementation with faster-than-realtime inference. This implementation includes distributed and automatic mixed precision support and uses the LJSpeech dataset. Visit our website for audio samples using our published Tacotron 2 and WaveGlow models. Training from a pre-trained model can lead to faster convergence. By default, the dataset-dependent text embedding layers are ignored. When performing mel-spectrogram to audio synthesis, make sure Tacotron 2 and the mel decoder were trained on the same mel-spectrogram representation.
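As a rough illustration of what "ignoring the dataset-dependent text embedding layers" means when warm-starting, the sketch below copies every pretrained parameter except those in an ignore list. Plain dicts and lists stand in for a real state dict and tensors, and the helper name `warm_start` and the key names are hypothetical.

```python
def warm_start(model_state, pretrained_state, ignore_layers):
    """Return a new state dict: pretrained values override the model's own,
    except for keys listed in ignore_layers, which keep their fresh values."""
    merged = dict(model_state)
    for name, value in pretrained_state.items():
        if name in ignore_layers:
            continue  # e.g. text embeddings tied to the old symbol set
        merged[name] = value
    return merged


# Hypothetical parameter names; lists stand in for tensors.
fresh = {"embedding.weight": [0.0, 0.0], "decoder.weight": [0.0]}
pretrained = {"embedding.weight": [9.0, 9.0], "decoder.weight": [1.5]}

state = warm_start(fresh, pretrained, ignore_layers=["embedding.weight"])
print(state)
```

The embedding stays freshly initialized because a new dataset may use a different symbol set, while the remaining layers start from the pretrained weights.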

PyTorch implementation of Tacotron, an end-to-end generative TTS model for speech synthesis. Tacotron 2 - PyTorch implementation with faster-than-realtime inference, adapted for Brazilian Portuguese.

Occasionally, you may see a spike in loss, after which the model forgets how to attend (the alignments will no longer make sense).

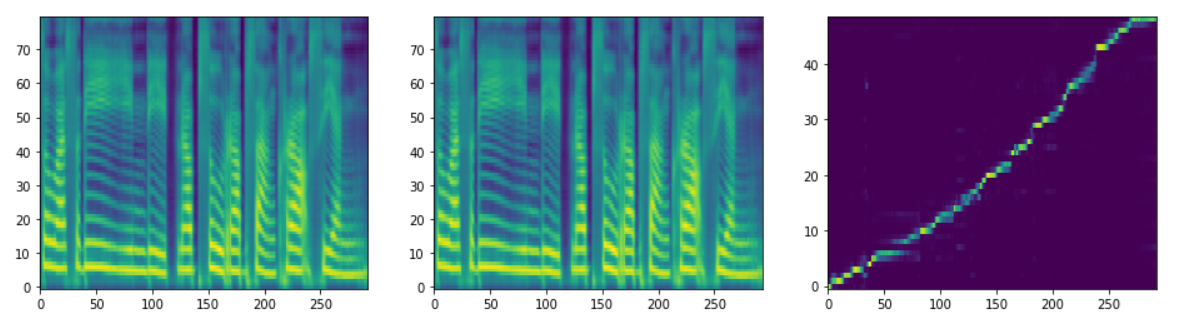

This implementation includes distributed and fp16 support and uses the LJSpeech dataset. Results from TensorBoard while training: This trains much faster and better than normal training; however, it may start by overflowing for a few steps, with messages similar to the following, before it starts training correctly. Below are the inference results after , and steps respectively, for the input text: "You stay in Wonderland and I show you how deep the rabbit hole goes." Around step , is when the network started to construct a proper alignment graph and make understandable sounds. This implementation uses code from the following repos: Keith Ito, Prem Seetharaman, as described in our code.
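The overflow in the first few fp16 steps is what dynamic loss scaling is designed to absorb: when a gradient overflows, the step is skipped and the scale is reduced. The sketch below shows that general mechanism in plain Python; the class name, default values, and growth policy are assumptions, not the code of the repository described above.

```python
import math


class DynamicLossScaler:
    """Toy dynamic loss scaler: shrink on overflow, grow after a run of good steps."""

    def __init__(self, init_scale=2.0 ** 16, factor=2.0, growth_interval=1000):
        self.scale = init_scale
        self.factor = factor
        self.growth_interval = growth_interval
        self.good_steps = 0

    def update(self, grads):
        """Return True if the optimizer step should be applied, False if skipped."""
        overflow = any(math.isinf(g) or math.isnan(g) for g in grads)
        if overflow:
            self.scale /= self.factor  # back off and skip this step
            self.good_steps = 0
            return False
        self.good_steps += 1
        if self.good_steps % self.growth_interval == 0:
            self.scale *= self.factor  # cautiously try a larger scale again
        return True


scaler = DynamicLossScaler(init_scale=8.0, growth_interval=2)
history = [scaler.update(g) for g in ([float("inf")], [1.0], [0.5])]
print(history, scaler.scale)
```

A large initial scale typically overflows a few times at the start, which would explain why early steps are skipped before training settles.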

An unofficial TensorFlow implementation of Google's Tacotron speech synthesis, with a pre-trained model. This can greatly reduce the amount of data required to train a model. In April , Google published a paper, Tacotron: Towards End-to-End Speech Synthesis, where they present a neural text-to-speech model that learns to synthesize speech directly from (text, audio) pairs. However, they didn't release their source code or training data. This is an independent attempt to provide an open-source implementation of the model described in their paper. The quality isn't as good as Google's demo yet, but hopefully it will get there someday. Pull requests are welcome! Install the latest version of TensorFlow for your platform. For better performance, install with GPU support if it's available. This code works with TensorFlow 1.

While browsing the Internet, I have noticed a large number of people claiming that Tacotron-2 is not reproducible, or that it is not robust enough to work on datasets other than the Google internal speech corpus. Although some open-source works [1, 2] have proven to give good results with the original Tacotron or even with WaveNet, it still seemed a little harder to reproduce the Tacotron 2 results with high fidelity to the descriptions in the Tacotron-2 (T2) paper. In this complementary documentation, I will mostly try to cover some ambiguities where understandings might differ, proving in the process that T2 actually works with open-source speech corpora such as the LJSpeech dataset. Also, because of the paper's size limit, the authors can't go into much detail, so they usually refer to previous works; in this documentation I did the job of extracting the relevant information from the references to make life a bit easier. Last but not least, despite only being released now, this documentation has mostly been written in parallel with development, so pardon the disorder; I did my best to make it clear enough. Also, feel free to correct any mistakes you might encounter or to contribute anything of added value (experiment results, plots, etc.).

API template for deploying tacotron2 voices. Install requirements: pip install -r requirements. Feel free to toy with the parameters as needed.

Yet another PyTorch implementation of Tacotron 2 with reduction factor and faster training speed. The project is highly based on these.

The trainer dumps audio and alignments every steps. Model description: the Tacotron 2 and WaveGlow models form a text-to-speech system that enables users to synthesise natural-sounding speech from raw transcripts without any additional prosody information. The default dataset is LJSpeech.
