Spark DataFrame

This tutorial shows you how to load and transform U.

Spark has an easy-to-use API for handling structured and semi-structured data called the DataFrame. Every DataFrame has a blueprint called a schema. A schema can contain common data types, such as string and integer types, as well as data types specific to Spark, such as StructType. In Spark, DataFrames are distributed collections of data organized into rows and columns. Each column in a DataFrame has a name and an associated type. DataFrames are similar to traditional database tables, in which data is structured into named, typed columns. Conceptually, a DataFrame is like a table in a relational database, but with better optimization techniques under the hood.

A DataFrame is a distributed collection of data organized into named columns. Conceptually, it is equivalent to a table in a relational database, but with better optimization techniques. DataFrames can process data ranging from kilobytes to petabytes, on anything from a single-node cluster to a large cluster. They also benefit from state-of-the-art optimization and code generation through the Spark SQL Catalyst optimizer, a tree transformation framework. By default, the SparkContext object is initialized with the name sc when the spark-shell starts.

DataFrame provides a domain-specific language for structured data manipulation. As a basic example of structured data processing, consider employee records stored in a JSON file named employee. The file can be read into a DataFrame, which can then be queried with this language.

Spark SQL supports two methods for creating a DataFrame from an existing RDD. The first method uses reflection to generate the schema of an RDD that contains specific types of objects. The second method is a programmatic interface that allows you to construct a schema and then apply it to an existing RDD.

In this way, users only need to initialize the SparkSession once, then SparkR functions like read.

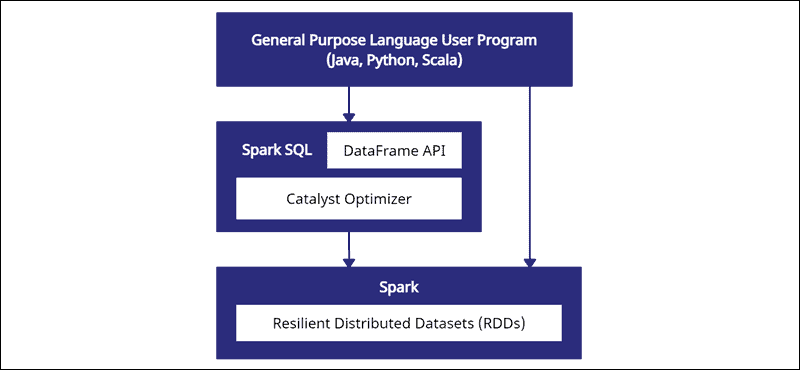

Spark SQL is a Spark module for structured data processing. Unlike the basic Spark RDD API, the interfaces provided by Spark SQL give Spark more information about the structure of both the data and the computation being performed. Internally, Spark SQL uses this extra information to perform extra optimizations. When computing a result, the same execution engine is used regardless of which API or language expresses the computation. This unification means that developers can easily switch back and forth between different APIs based on which provides the most natural way to express a given transformation. All of the examples on this page use sample data included in the Spark distribution and can be run in the spark-shell, pyspark shell, or sparkR shell. Spark SQL can also be used to read data from an existing Hive installation. For more on how to configure this feature, please refer to the Hive Tables section. A Dataset is a distributed collection of data.

Apache Spark DataFrame is a distributed collection of data organized into named columns, similar to a table in a relational database. It offers powerful features for processing structured and semi-structured data efficiently in a distributed manner, and it is designed to handle large-scale data processing tasks in distributed computing environments. In this comprehensive guide, we'll explore everything you need to know about Spark DataFrame, from its basic concepts to advanced operations.

Schema: the schema defines the structure of the DataFrame, including the names and data types of its columns. It acts as a blueprint for organizing the data.

Partitioning: Spark DataFrame partitions data across the nodes of a cluster for parallel processing.

By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks:

- Create a DataFrame with Scala.
- View and interact with a DataFrame.
- Run SQL queries in Spark.

A DataFrame is a two-dimensional labeled data structure with columns of potentially different types.

Temporary views in Spark SQL are session-scoped and disappear when the session that created them terminates.

Print the DataFrame schema

Spark uses the term schema to refer to the names and data types of the columns in a DataFrame. Note that Databricks also uses the term schema to describe a collection of tables registered to a catalog.

A fileFormat is a kind of package of storage format specifications, including "serde", "input format" and "output format". The ORC implementation Spark uses can be one of native and hive; for the hive implementation, the vectorized ORC reader setting is ignored.

Most Spark applications work on large data sets and in a distributed fashion. Many of the code examples prior to Spark 1. Java and Python users will need to update their code. In general these classes try to use types that are usable from both languages i.

In this article, I will talk about installing Spark, the standard Spark functionality you will need to work with DataFrames, and finally, some tips for handling the inevitable errors you will face. This article is going to be quite long, so go ahead and grab a coffee first. PySpark DataFrames are distributed collections of data that can be processed across multiple machines and that organize data into named columns.

This compatibility guarantee excludes APIs that are explicitly marked as unstable i. Version of the Hive metastore. For a complete list of the types of operations that can be performed on a Dataset, refer to the API Documentation. For these use cases, the automatic type inference can be configured by spark. They store the data as a collection of Java objects. DataFrames can still be converted to RDDs by calling the. The complete list is available in the DataFrame Function Reference.

Select columns from a DataFrame

Learn about which state a city is located in with the select method.
