PySpark withColumn

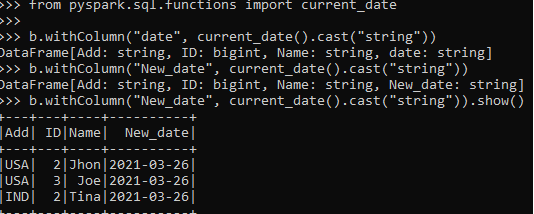

In PySpark, withColumn is a widely used DataFrame transformation function. It can change the value of a column, convert the data type of an existing column, create a new column, and more. To cast or change the data type of a column, use the cast function together with withColumn.

As an example, suppose we have a PySpark DataFrame that contains information about points scored by basketball players on various teams. You can use withColumn together with the when and otherwise functions to create a new column named rating that returns 1 if the value in the points column is greater than 20 and 0 otherwise. The new rating column then contains either 0 or 1. Note: the complete documentation for the PySpark withColumn function is available in the official PySpark API reference.

PySpark withColumn is a DataFrame transformation function used to change the value of a column, convert the data type of an existing column, create a new column, and more.

To change a data type, use the cast function together with withColumn. For example, casting the salary column changes its data type from String to Integer.

withColumn can also change the value of an existing column. Pass the existing column name as the first argument and the value to assign as the second argument. Note that the second argument must be of Column type.

To create a new column, pass the new column name as the first argument of withColumn. Make sure the new column is not already present on the DataFrame; if it is, withColumn updates the value of that column instead. The PySpark lit function can be used to add a constant value as a DataFrame column, and withColumn calls can be chained to add multiple columns.

Though you cannot rename a column using withColumn, I still wanted to cover this, as renaming is one of the most common operations we perform on a DataFrame. To rename an existing column, use the withColumnRenamed function on the DataFrame.

Note: all of these functions return a new DataFrame after applying the change instead of updating the existing DataFrame.

withColumn is a DataFrame transformation operation, meaning it returns a new DataFrame with the specified changes, without altering the original DataFrame.

Renaming becomes more complicated when columns are nested. The examples here assume a schema with a nested structure, in which a name struct contains firstname and lastname fields. I am not printing the data, as it is not necessary for these examples.

Using withColumnRenamed is the most straightforward approach. The function takes two parameters: the first is the existing column name and the second is the new column name. To change multiple column names, chain withColumnRenamed calls. You can also store all the columns to rename in a list and loop through it; I will leave this for you to explore.

Changing a column name in nested data is not straightforward. One way to do it is to define a new schema for the nested columns using StructType and apply it with the cast function. Casting the name struct this way renames firstname to fname and lastname to lname within the name structure.

To call the PySpark withColumn function, you must supply two arguments. The first argument is the name of the new or existing column. The second argument is the value used to populate that column. This value can be a constant value (wrapped with lit so that it becomes a Column), a PySpark column, or a PySpark expression. Overall, the withColumn function is a convenient way to perform transformations on the data within a DataFrame and is widely used in PySpark applications.

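withColumn returns a new DataFrame by adding a column or replacing the existing column that has the same name. The column expression must be an expression over this DataFrame; attempting to add a column from some other DataFrame will raise an error. This method introduces a projection internally, so calling it many times, for instance in a loop that adds multiple columns, can generate large query plans and cause performance issues. To avoid this, use select with multiple columns at once.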

Applying a function to a column: withColumn can also be used to apply a function to a column, for example to convert an age column from years to months.
