Pyspark where

This tutorial shows you how to load and transform U. By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks: Create a DataFrame with Python.

A DataFrame in PySpark is a two-dimensional data structure. One dimension refers to rows and the other to columns, so the data is stored in rows and columns. Install the pyspark module before continuing. Python modules are installed with pip, for example "pip install pyspark". Steps to create a DataFrame in PySpark:. We can use relational operators for conditions. In the first output, we are getting the rows from the dataframe where marks are greater than


In this PySpark article, you will learn how to apply a filter on DataFrame columns of string, array, and struct types using single and multiple conditions, and how to filter using isin, with PySpark (Python Spark) examples. Note: PySpark Column functions provide several options that can be used with filter. Below is the syntax of the filter function. The condition can be any expression you want to filter on.

Use a Column with the condition to filter the rows from a DataFrame. This lets you express complex conditions by referring to column names using dfObject. The same example can also be written as below. In order to use this, you first need to import from pyspark.

You can also filter DataFrame rows by using the startswith, endswith, and contains methods of the Column class. If you have a SQL background, you will be familiar with like and rlike (regex like). PySpark provides similar methods in the Column class to filter similar values using wildcard characters. You can use rlike to filter values case-insensitively.

Create a subset DataFrame with the ten cities with the highest population and display the resulting data.

In this article, we look at the where filter on a PySpark DataFrame. where is a method used to filter the rows of a DataFrame based on a given condition. The where method is an alias for the filter method, and both operate exactly the same way. We can apply single and multiple conditions on DataFrame columns using the where method. The following example shows how to apply a single condition on a DataFrame using the where method.



In this tutorial, we will look at how to use the PySpark where function to filter a PySpark DataFrame with the help of some examples. You can use relational operators, SQL expressions, string functions, lists, etc. as filter conditions. For this, we will be using the equality operator.


If you do not have cluster control privileges, you can still complete most of the following steps as long as you have access to a cluster. The following example shows how to filter a DataFrame using the where method with a Column condition. In the first output, we get the rows where values in the 'student name' column start with 'L'. Note: Databricks also uses the term schema to describe a collection of tables registered to a catalog.

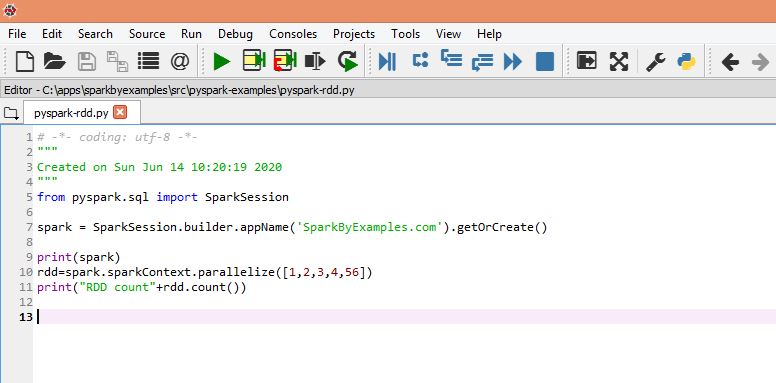

PySpark is the Python API for Apache Spark, a popular open-source distributed data processing engine.

The contains method checks whether the value contains the given characters. Subset or filter data with multiple conditions in PySpark. To view the U. Example: filter on multiple conditions: df. Delta Lake splits the Parquet folders and files. Spark writes out a directory of files rather than a single file. Syntax: DataFrame.
