Pivot pyspark

Pivoting is a data transformation technique that involves converting rows into columns. This operation is valuable when reorganizing data for enhanced readability, aggregation, or analysis.

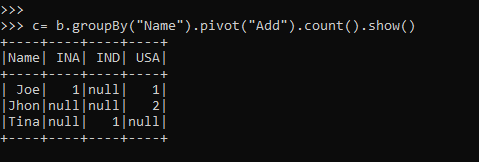

Pivoting is a widely used technique in data analysis, enabling you to transform data from a long format to a wide format by aggregating it based on specific criteria. PySpark, the Python library for Apache Spark, provides a powerful and flexible set of built-in functions for pivoting DataFrames, allowing you to create insightful pivot tables from your big data. In this blog post, we will provide a comprehensive guide on using the pivot function in PySpark DataFrames, covering basic pivot operations, custom aggregations, and pivot table manipulation techniques.

To create a pivot table in PySpark, you can use the groupBy and pivot functions in conjunction with an aggregation function like sum, count, or avg. For instance, the groupBy function can group the data by a "GroupColumn" column, and the pivot function can pivot the data on a "PivotColumn" column. Finally, the sum function aggregates the data by summing the values in a "ValueColumn" column.

When creating a pivot table, you may encounter null values in the pivoted columns. They appear wherever a group has no rows for a given pivot value, and they can be replaced with the fillna function.

The pivot function pivots a column of the current DataFrame and performs the specified aggregation. There are two versions of the pivot function: one that requires the caller to specify the list of distinct values to pivot on, and one that does not. The latter is more concise but less efficient, because Spark needs to first compute the list of distinct values internally.

PySpark SQL provides the pivot function to rotate the data from one column into multiple columns. It is an aggregation where the values of one of the grouping columns are transposed into individual columns with distinct data. To get the total amount exported to each country for each product, we group by Product, pivot by Country, and take the sum of Amount. This transposes the countries from DataFrame rows into columns. Another approach is to do a two-phase aggregation: first aggregate by Product and Country, then pivot the pre-aggregated result.

The pivot function in PySpark is a method available for GroupedData objects, allowing you to execute a pivot operation on a DataFrame. The general syntax for the pivot function is pivot(pivot_col, values=None), where pivot_col is the name of the column to pivot and values is an optional list of the values to turn into columns. If values is not specified, all unique values in the pivot column will be used. To utilize the pivot function, you must first group your DataFrame using the groupBy function. Next, you can call the pivot function on the GroupedData object, followed by the aggregation function.

You can use syntax such as df.groupBy('team').pivot('position').sum('points') to create a pivot table from a PySpark DataFrame. This particular example creates a pivot table using the team column as the rows, the position column as the columns and the sum of the points column as the values.

To see this in practice, suppose we have a PySpark DataFrame that contains information about the points scored by various basketball players, with team, position and points columns. Grouping by team, pivoting on position and summing points produces a pivot table that shows the sum of the points values for each team and position. We could also use mean instead of sum, in which case the resulting pivot table shows the mean of the points values for each team and position. Feel free to use whichever summary metric you would like when creating your own pivot table.

If there are duplicate entries for the same group and pivot value, you may need to perform an aggregation to combine them. You can sort the rows of a pivot table using the orderBy function, and control the order of its columns either by listing the pivot values explicitly or by selecting the columns in the order you want. Because pivoting on a column with many distinct values is expensive, consider the following best practice: filter data before pivoting to include only pertinent values.

Unpivot is the reverse operation of pivot: it rotates column values back into rows. Notice that pandas raises a ValueError when duplicated values are found during a pivot. The pivot in the pandas API on Spark also supports multi-index and multi-index columns. So, start refining your pivot skills and unlock the full power of your big data processing tasks with PySpark.
