Pandas to Spark

As a data scientist or software engineer, you may often find yourself working with large datasets that require distributed computing. Apache Spark is a powerful distributed computing framework that can handle big data processing tasks efficiently.

Sometimes the data arrives as CSV, XLSX, or similar files, which are convenient to load with pandas first. For conversion, we pass the pandas DataFrame to the createDataFrame method of the SparkSession. Example 1 and Example 2 both create a pandas DataFrame and then convert it using Spark. The dataset used here is the heart dataset.

To use pandas, you first have to import it with import pandas as pd. On large datasets, PySpark operations can run much faster than pandas because of Spark's distributed nature and parallel execution on multiple cores and machines. In other words, pandas runs operations on a single node, whereas PySpark can run them across multiple machines. For large workloads, PySpark can process operations many times faster than pandas. If you want all data types to be String, use spark.

Apache Arrow can speed up this conversion. Arrow is disabled by default, so you need to enable it, and you need Apache Arrow (PyArrow) installed on all Spark cluster nodes, either with pip install pyspark[sql] or by downloading it directly from Apache Arrow for Python. The installed Arrow version must be compatible with your Spark version; if Apache Arrow is not installed, you get an error. When an error occurs, Spark automatically falls back to the non-Arrow implementation; this can be controlled by spark.

In this article, you have learned how easy it is to convert a pandas DataFrame to a Spark DataFrame and how to optimize the conversion using Apache Arrow's in-memory columnar format.

Naveen's journey in the field of data engineering has been one of continuous learning, innovation, and a strong commitment to data integrity. In this blog, he shares his experiences with data as he comes across them. Follow Naveen on LinkedIn and Medium.

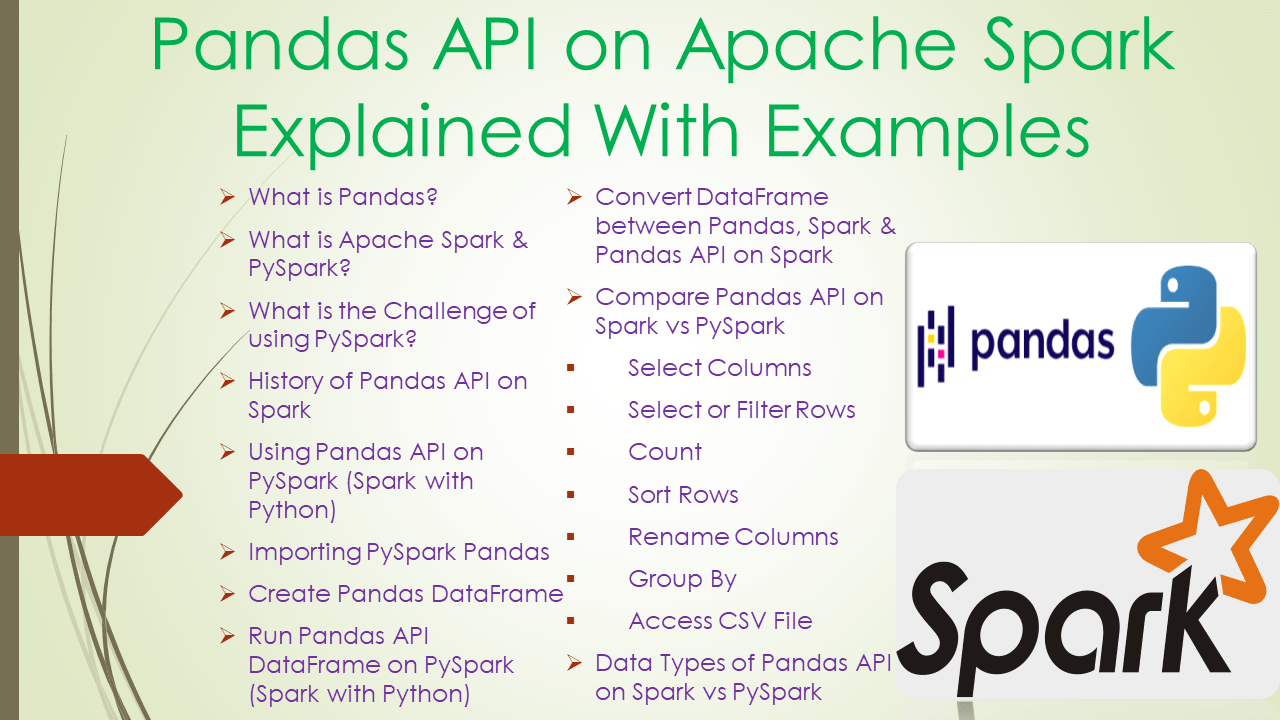

This is a short introduction to pandas API on Spark, geared mainly toward new users, and it shows some key differences between pandas and pandas API on Spark. You can create a pandas-on-Spark Series by passing a list of values, letting pandas API on Spark create a default integer index. You can create a pandas-on-Spark DataFrame by passing a dict of objects that can be converted to series-like objects. The resulting columns have specific dtypes; types that are common to both Spark and pandas are currently supported. Note that the data in a Spark DataFrame does not preserve its natural order by default.

Arrow optimization can greatly speed up the internal conversion between pandas and Spark. A pandas DataFrame is a two-dimensional, table-like data structure used to store and manipulate data in Python. Once the data is in a Spark DataFrame, you can, for example, filter it using the filter method. You can also convert a PySpark DataFrame back to a pandas DataFrame using toPandas(). The head() function shows only the first few rows.

Arrow-based conversion is beneficial to Python developers who work with pandas and NumPy data.

Parallelism: Spark can perform operations on data in parallel, which can significantly improve the performance of data processing tasks. Converting a pandas DataFrame to a PySpark DataFrame can be necessary when you need to scale up your data processing to handle larger datasets. Until then, you can perform various operations on a pandas DataFrame, such as filtering, grouping, and aggregation.

PySpark is the Python API for Apache Spark; it provides a way to interact with Spark from Python. Before running any of the code, make sure that the pandas and PySpark libraries are installed on your system. You can install PySpark using pip with pip install pyspark. You also need a SparkSession, which is the entry point for working with Spark DataFrames. After the conversion, calling printSchema() on the Spark DataFrame shows its schema, including the data type of each column.