Keras LSTM

I am using a Keras LSTM to predict future target values; this is a regression problem, not classification. I created lags for the 7 columns (the target and the other 6 features), making 14 lags for each with a lag interval of 1. I then used the Column Aggregator node to create a list containing the 98 values (14 lags × 7 features).
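The same lagging can be sketched outside KNIME with NumPy. This is a minimal sketch, assuming all 7 columns sit in one array with the target in column 0; `make_lag_windows` is a hypothetical helper, not a KNIME or Keras function. Instead of a flat 98-value list, it keeps the (14 lags, 7 features) structure that a Keras LSTM expects as `(samples, time_steps, features)`.

```python
import numpy as np

def make_lag_windows(series: np.ndarray, n_lags: int, target_col: int = 0):
    """Turn a (time, features) array into LSTM-ready windows (hypothetical helper).

    Returns X with shape (samples, n_lags, n_features) holding the values at
    t-n_lags .. t-1, and y with shape (samples,) holding the target at time t.
    """
    n_steps, n_features = series.shape
    n_samples = n_steps - n_lags
    X = np.empty((n_samples, n_lags, n_features), dtype=series.dtype)
    y = np.empty(n_samples, dtype=series.dtype)
    for i in range(n_samples):
        X[i] = series[i:i + n_lags]            # rows t-n_lags .. t-1
        y[i] = series[i + n_lags, target_col]  # target value at time t
    return X, y

# Hypothetical data: 100 time steps, 7 columns (target in column 0 + 6 features)
data = np.random.rand(100, 7)
X, y = make_lag_windows(data, n_lags=14)
print(X.shape, y.shape)  # (86, 14, 7) (86,)
```

Each window flattened gives exactly the 98 values described above, but keeping the time axis separate avoids having to undo the flattening before feeding the LSTM.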

Note: this post is from …; see this tutorial for an up-to-date version of the code used here. I see this question a lot: how do you implement RNN sequence-to-sequence learning in Keras? Here is a short introduction. Note that this post assumes that you already have some experience with recurrent networks and Keras.
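A minimal sketch of the usual encoder-decoder (sequence-to-sequence) training model in Keras is shown below. The token counts and `latent_dim` are hypothetical values chosen for illustration. The encoder LSTM keeps only its final states; the decoder LSTM starts from those states and, during training, receives the target sequence shifted by one step (teacher forcing).

```python
import numpy as np
import keras
from keras import layers

# Hypothetical sizes, chosen only for illustration
num_encoder_tokens = 20   # distinct characters in the source language
num_decoder_tokens = 30   # distinct characters in the target language
latent_dim = 64           # size of the LSTM state

# Encoder: read the input sequence and keep only its final hidden and cell states
encoder_inputs = keras.Input(shape=(None, num_encoder_tokens))
_, state_h, state_c = layers.LSTM(latent_dim, return_state=True)(encoder_inputs)
encoder_states = [state_h, state_c]

# Decoder: predict each next target token, starting from the encoder states.
# Its own return states are unused in training but would be needed for inference.
decoder_inputs = keras.Input(shape=(None, num_decoder_tokens))
decoder_seq, _, _ = layers.LSTM(latent_dim, return_sequences=True, return_state=True)(
    decoder_inputs, initial_state=encoder_states
)
decoder_outputs = layers.Dense(num_decoder_tokens, activation="softmax")(decoder_seq)

model = keras.Model([encoder_inputs, decoder_inputs], decoder_outputs)
model.compile(optimizer="rmsprop", loss="categorical_crossentropy")

# Shape check with dummy one-hot batches: 2 samples, 5 input steps, 6 output steps
enc = np.zeros((2, 5, num_encoder_tokens))
dec = np.zeros((2, 6, num_decoder_tokens))
pred = model.predict([enc, dec], verbose=0)
print(pred.shape)  # (2, 6, 30)
```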


Ayush Thakur.

There are principally four modes in which to run a recurrent neural network (RNN). One-to-one is straightforward enough, so let's look at the others. LSTMs can be used for a multitude of deep learning tasks using these different modes. We will go through each of these modes along with its use case and a code snippet in Keras. One-to-many sequence problems are sequence problems where the input data has one time step and the output contains a vector of multiple values or multiple time steps. Thus, we have a single input and a sequence of outputs. A typical example is image captioning, where a description of an image is generated. We have created a toy dataset shown in the image below.
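One common way to build a one-to-many model in Keras is to encode the single input step and then repeat that encoding once per output step with `RepeatVector`. This is a sketch of that approach, not necessarily the article's own code; the layer sizes and the output length of 3 are assumptions.

```python
import numpy as np
import keras
from keras import layers

n_out_steps = 3  # assumed length of the output sequence

model = keras.Sequential([
    keras.Input(shape=(1, 1)),                   # one time step, one feature
    layers.LSTM(50, activation="relu"),          # encode the single input into a vector
    layers.RepeatVector(n_out_steps),            # copy that vector once per output step
    layers.LSTM(50, activation="relu", return_sequences=True),
    layers.TimeDistributed(layers.Dense(1)),     # one value per output step
])
model.compile(optimizer="adam", loss="mse")

x = np.array([[[5.0]]])                          # a single input sample
print(model.predict(x, verbose=0).shape)         # (1, 3, 1)
```

If the output should be a plain vector rather than a sequence, a single `Dense(n_out_steps)` after the first LSTM is the simpler alternative.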

The input has 20 samples with three time steps each, while the output has the next three consecutive multiples of 5. I reduced the size of the dataset, but the problem remains.
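A toy dataset of this kind can be built with NumPy. This sketch assumes that each input window holds three consecutive multiples of 5 and that the target is the next three multiples; the exact values in the original toy dataset are not shown, so treat them as illustrative.

```python
import numpy as np

# Assumed construction: 20 samples, each three consecutive multiples of 5,
# shaped (samples, time_steps, features) as an LSTM expects
X = np.arange(5, 301, 5, dtype=float).reshape(20, 3, 1)  # X[0] = [5, 10, 15]

# Target: the next three multiples of 5 after each input window
y = X + 15                                                 # y[0] = [20, 25, 30]

print(X.shape, y.shape)  # (20, 3, 1) (20, 3, 1)
```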


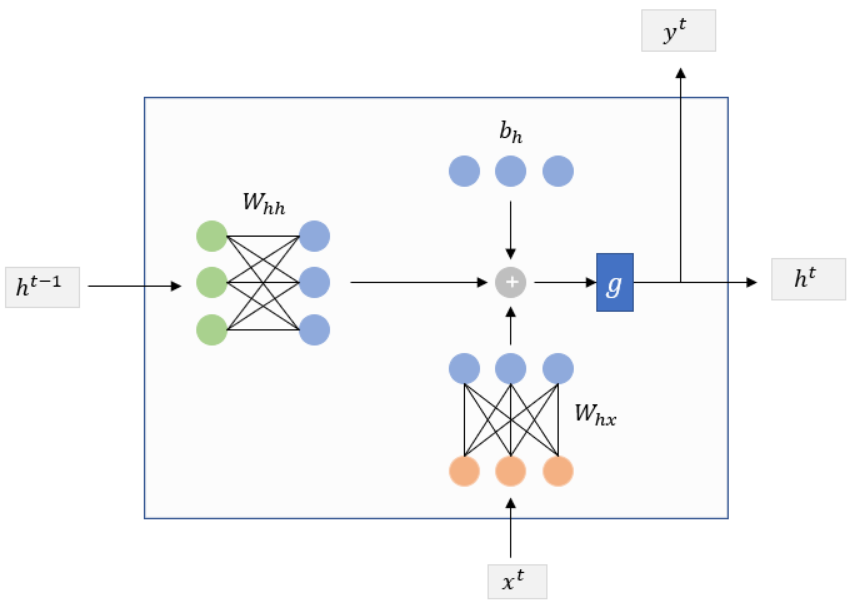

We will use a stock price dataset to build an LSTM in Keras that predicts whether the stock will go up or down. But first, let us look at what an LSTM is. A Long Short-Term Memory (LSTM) network is a variant of the recurrent neural network (RNN) that is quite effective at modeling long sequences of data, such as sentences or stock prices over a period of time. It differs from a normal feedforward network because its architecture contains a feedback loop. It also includes a special unit, the memory cell, that retains past information for a longer time so the network can make effective predictions. In fact, an LSTM with its memory cells is an improved version of a traditional RNN, which struggles to learn from such long sequences because it runs into the vanishing gradient problem.
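The role of the memory cell is easiest to see in a single step of a standard LSTM cell. The NumPy sketch below uses the textbook gate equations; the weight layout and the gate order (input, forget, candidate, output) are conventions chosen here and need not match Keras's internal storage. The cell state `c` is updated mostly additively (`f * c_prev + i * g`), which is what helps gradients survive over long spans compared with a plain RNN.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell.

    W: (4*units, n_features), U: (4*units, units), b: (4*units,)
    Gate blocks are stacked here in the order input, forget, candidate, output.
    """
    units = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[:units])                 # input gate: how much new info to write
    f = sigmoid(z[units:2 * units])        # forget gate: how much old memory to keep
    g = np.tanh(z[2 * units:3 * units])    # candidate values for the memory cell
    o = sigmoid(z[3 * units:])             # output gate: how much memory to expose
    c = f * c_prev + i * g                 # memory cell: mostly additive update
    h = o * np.tanh(c)                     # hidden state passed to the next step
    return h, c

# Run the cell over 14 time steps of 7 random features (illustrative sizes)
rng = np.random.default_rng(0)
units, n_features = 4, 7
W = rng.normal(size=(4 * units, n_features))
U = rng.normal(size=(4 * units, units))
b = np.zeros(4 * units)
h, c = np.zeros(units), np.zeros(units)
for x_t in rng.normal(size=(14, n_features)):
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape)  # (4,)
```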


Low-rate distributed denial of service attacks, also known as LDDoS attacks, pose notorious security risks in cloud computing networks. They overload cloud servers and degrade network service quality through a stealthy strategy. Furthermore, this kind of small-ratio, pulse-like abnormal traffic leads to a serious data scale problem. As a result, existing models for detecting minority and adversarial LDDoS attacks are insufficient in both detection accuracy and time consumption. Then, it employs an arbitration network to re-weight feature importance for higher accuracy. Finally, it uses a 2-block densely connected network to perform the final classification.


The input has 15 samples with three time steps, and the output is the sum of the values in each step. Hello Kathrin, thanks a lot for your explanation. Do you know what may cause this issue? What if your inputs are integer sequences, e.g. …? There are multiple ways to handle this task, using either RNNs or 1D convnets. These are some of the resources that I found relevant for my own understanding of these concepts. I really appreciate it! We don't use the return states in the training model, but we will use them in inference. For our example implementation, we will use a dataset of pairs of English sentences and their French translations, which you can download from manythings. I still cannot figure out how to implement it, or how that would affect the input shape of the Keras Input layer. In KNIME we therefore have to create a collection cell where the values appear in the following order: x(t-4), x(t-3), x(t-2), x(t-1), y(t-4), y(t-3), y(t-2), y(t-1), z(t-4), z(t-3), z(t-2), z(t…
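A collection cell in this feature-major order (all lags of x, then all lags of y, and so on) can be turned into the `(time_steps, features)` layout an LSTM expects with one reshape and one transpose. This is a NumPy sketch assuming exactly that ordering; `collection_to_lstm_input` is a hypothetical helper name, and the 3-feature, 4-lag row is made up for illustration.

```python
import numpy as np

def collection_to_lstm_input(rows, n_features, n_lags):
    """Reshape feature-major flat rows into (samples, time_steps, features).

    Each row is assumed to be ordered x(t-n_lags)..x(t-1), y(t-n_lags)..y(t-1), ...
    """
    rows = np.asarray(rows)
    # (samples, features, lags) -> swap the last two axes -> (samples, lags, features)
    return rows.reshape(-1, n_features, n_lags).transpose(0, 2, 1)

# One hypothetical row with 3 features (x, y, z) and 4 lags each
row = [1, 2, 3, 4,           # x values, oldest lag first
       10, 20, 30, 40,       # y values, oldest lag first
       100, 200, 300, 400]   # z values, oldest lag first
out = collection_to_lstm_input([row], n_features=3, n_lags=4)
print(out.shape)  # (1, 4, 3); each row of out[0] is one time step: [1, 10, 100], ...
```

For the 98-value list from the question, the same call with `n_features=7, n_lags=14` would give shape `(samples, 14, 7)`, provided the list really follows this ordering.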

It is recommended to run this script on a GPU, as recurrent networks are quite computationally intensive. Corpus length: …; Total chars: 56; Number of sequences: …
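The "Corpus length", "Total chars" and "Number of sequences" lines appear to come from a character-level text generation script. A minimal sketch of that kind of preprocessing is shown below, assuming overlapping windows of `maxlen` characters taken every `step` characters, each paired with the character that follows it; `char_sequences`, the tiny corpus, and the window settings are illustrative assumptions.

```python
import numpy as np

def char_sequences(text, maxlen=40, step=3):
    """Cut a corpus into overlapping character windows plus next-char targets."""
    chars = sorted(set(text))
    char_indices = {c: i for i, c in enumerate(chars)}
    sentences, next_chars = [], []
    for i in range(0, len(text) - maxlen, step):
        sentences.append(text[i:i + maxlen])
        next_chars.append(text[i + maxlen])
    # One-hot encode: x is (sequences, maxlen, vocab), y is (sequences, vocab)
    x = np.zeros((len(sentences), maxlen, len(chars)), dtype=bool)
    y = np.zeros((len(sentences), len(chars)), dtype=bool)
    for i, sentence in enumerate(sentences):
        for t, ch in enumerate(sentence):
            x[i, t, char_indices[ch]] = True
        y[i, char_indices[next_chars[i]]] = True
    return chars, x, y

text = "hello world, hello keras"          # tiny illustrative corpus
chars, x, y = char_sequences(text, maxlen=5, step=2)
print("Corpus length:", len(text))          # Corpus length: 24
print("Total chars:", len(chars))           # Total chars: 12
print("Number of sequences:", len(x))       # Number of sequences: 10
```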

The next copy of the LSTM unit sees the 7 input features of the next time step, and so on. This concludes our ten-minute introduction to sequence-to-sequence models in Keras. Thanks for the nice article. Basically, I have other datasets with … rows and 7 columns (a target column and 6 features). You can embed these integer tokens via an Embedding layer. The model predicted the value: [[[… Do I just append all the datasets, create one big dataset, and work on that? Does this make sense? The model predicted the sequence [[…
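When the inputs are integer token sequences, the model input drops the feature axis: each sample is just `(time_steps,)` integers, and an `Embedding` layer turns every token id into a dense vector before the LSTM. This is a minimal sketch; the vocabulary size, embedding size, layer widths, and the single regression output are all hypothetical choices.

```python
import numpy as np
import keras
from keras import layers

vocab_size = 1000   # hypothetical number of distinct integer tokens
embed_dim = 64      # hypothetical embedding size

model = keras.Sequential([
    keras.Input(shape=(None,), dtype="int32"),                      # variable-length token ids
    layers.Embedding(input_dim=vocab_size, output_dim=embed_dim),   # token id -> dense vector
    layers.LSTM(32),                                                # read the embedded sequence
    layers.Dense(1),                                                # e.g. one regression output
])
model.compile(optimizer="adam", loss="mse")

tokens = np.array([[4, 27, 311, 9, 0]], dtype="int32")  # one sequence of 5 token ids
print(model.predict(tokens, verbose=0).shape)           # (1, 1)
```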
