io.confluent.kafka.serializers.KafkaAvroSerializer

You are viewing documentation for an older version of Confluent Platform.

The Confluent Schema Registry-based Avro serializer, by design, does not include the message schema. Instead, it writes a magic byte and the schema ID, followed by the normal binary encoding of the data itself. You can choose whether or not to embed a schema inline, allowing for cases where you may want to communicate the schema offline, with headers, or in some other way. This is in contrast to other systems, such as Hadoop, that always include the schema with the message data. To learn more, see Wire format.
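To make the layout concrete, here is a minimal sketch of the framing, assuming the commonly documented layout of a single magic byte `0`, then the schema ID as a 4-byte big-endian integer, then the Avro binary payload. The class name `WireFormatFrame` and the schema ID `42` are hypothetical, and this is not the serializer's actual source. The payload bytes `0x02 0x61` are the Avro binary encoding of the string `"a"` (zigzag-encoded length 1, then the UTF-8 byte for `a`).

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

// Hypothetical helper: frames an already-encoded Avro payload as
// [magic byte 0][4-byte big-endian schema ID][Avro binary payload].
final class WireFormatFrame {
    static final byte MAGIC_BYTE = 0x0;

    static byte[] frame(int schemaId, byte[] avroPayload) {
        // ByteBuffer uses big-endian byte order by default.
        ByteBuffer buf = ByteBuffer.allocate(1 + Integer.BYTES + avroPayload.length);
        buf.put(MAGIC_BYTE);
        buf.putInt(schemaId);
        buf.put(avroPayload);
        return buf.array();
    }

    public static void main(String[] args) {
        // Avro binary encoding of the string "a": zigzag length 1 (0x02), then 'a' (0x61).
        byte[] payload = {0x02, 0x61};
        // Schema ID 42 is an illustrative value, not one assigned by a real registry.
        System.out.println(Arrays.toString(frame(42, payload))); // prints [0, 0, 0, 0, 42, 2, 97]
    }
}
```

Note that the schema itself never appears in the framed bytes; a reader must look up ID `42` in Schema Registry (or obtain the schema some other way) before it can decode the payload.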


Support for the Protobuf and JSON Schema serialization formats is not limited to Schema Registry but is provided throughout Confluent Platform. Additionally, Schema Registry is extensible: you can add custom schema formats as schema plugins. The serializers can automatically register schemas when serializing a Protobuf message or a JSON-serializable object, and the Protobuf serializer can recursively register all imported schemas. The serializers and deserializers are available in multiple languages, including Java, .NET, and Python.

Schema Registry supports multiple formats at the same time. For example, you can have Avro schemas in one subject and Protobuf schemas in another. Furthermore, Protobuf and JSON Schema each have their own compatibility rules, so your Protobuf schemas can evolve in a backward- or forward-compatible manner, just as with Avro.

The rules for Avro are detailed in the Avro specification under Schema Resolution.
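Because one registry serves every format, an application that writes Avro to one topic and Protobuf to another only needs a different value serializer per producer. The sketch below assumes the Confluent serializer class names shown and a hypothetical registry at `http://localhost:8081`; the `SchemaFormat` enum and `MultiFormatProducers` class are illustrative helpers, not part of any Confluent API. It only builds `java.util.Properties`, so it runs without Kafka on the classpath.

```java
import java.util.Properties;

// Hypothetical enum mapping each schema format to a Confluent value serializer class name.
enum SchemaFormat {
    AVRO("io.confluent.kafka.serializers.KafkaAvroSerializer"),
    PROTOBUF("io.confluent.kafka.serializers.protobuf.KafkaProtobufSerializer"),
    JSON_SCHEMA("io.confluent.kafka.serializers.json.KafkaJsonSchemaSerializer");

    final String serializerClass;

    SchemaFormat(String serializerClass) {
        this.serializerClass = serializerClass;
    }
}

final class MultiFormatProducers {
    // Builds producer settings for one format; every format points at the same registry.
    static Properties producerProps(SchemaFormat format, String bootstrapServers, String registryUrl) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", format.serializerClass);
        props.put("schema.registry.url", registryUrl);
        // Let the serializer register new schemas on first use (also the default behavior).
        props.put("auto.register.schemas", "true");
        return props;
    }

    public static void main(String[] args) {
        String registry = "http://localhost:8081"; // hypothetical registry URL
        Properties avro = producerProps(SchemaFormat.AVRO, "localhost:9092", registry);
        Properties proto = producerProps(SchemaFormat.PROTOBUF, "localhost:9092", registry);
        System.out.println(avro.getProperty("value.serializer"));
        System.out.println(proto.getProperty("value.serializer"));
    }
}
```

Each producer then registers its schemas under its own topic's subjects, so Avro and Protobuf schemas coexist in the same registry without interfering.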

There are two ways to interact with Kafka: using a native client for your language combined with serializers compatible with Schema Registry, or using the REST Proxy. Whichever method you choose, the most important factor is to ensure that your application coordinates with Schema Registry to manage schemas and guarantee data compatibility. Most commonly, you will use the serializers if your application is developed in a language with supported serializers, and the REST Proxy for applications written in other languages. Java applications can use the standard Kafka producers and consumers, substituting io.confluent.kafka.serializers.KafkaAvroSerializer (and the equivalent deserializer, io.confluent.kafka.serializers.KafkaAvroDeserializer) for the default ByteArraySerializer. This allows Avro data to be passed into the producer directly and lets the consumer deserialize and return Avro data.
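The substitution amounts to a few client settings. This is a minimal sketch, assuming the standard Kafka property keys and a hypothetical broker at `localhost:9092` and registry at `http://localhost:8081`; `AvroClientConfig` is an illustrative helper name. The resulting `Properties` would be passed to a `KafkaProducer` or `KafkaConsumer` constructor.

```java
import java.util.Properties;

// Hypothetical helper that swaps the default byte-array (de)serializers
// for the Schema Registry-aware Avro ones.
final class AvroClientConfig {
    static Properties producer(String bootstrapServers, String registryUrl) {
        Properties p = new Properties();
        p.put("bootstrap.servers", bootstrapServers);
        p.put("key.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        p.put("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        // Where the serializer looks up and registers schemas.
        p.put("schema.registry.url", registryUrl);
        return p;
    }

    static Properties consumer(String bootstrapServers, String registryUrl, String groupId) {
        Properties p = new Properties();
        p.put("bootstrap.servers", bootstrapServers);
        p.put("group.id", groupId);
        p.put("key.deserializer", "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        p.put("value.deserializer", "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        // The deserializer fetches the writer schema by the ID embedded in each message.
        p.put("schema.registry.url", registryUrl);
        return p;
    }

    public static void main(String[] args) {
        Properties prod = producer("localhost:9092", "http://localhost:8081"); // hypothetical endpoints
        Properties cons = consumer("localhost:9092", "http://localhost:8081", "example-group");
        System.out.println(prod.getProperty("value.serializer"));
        System.out.println(cons.getProperty("value.deserializer"));
    }
}
```

Producer and consumer must point at the same registry, since the consumer resolves the schema ID written by the producer.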

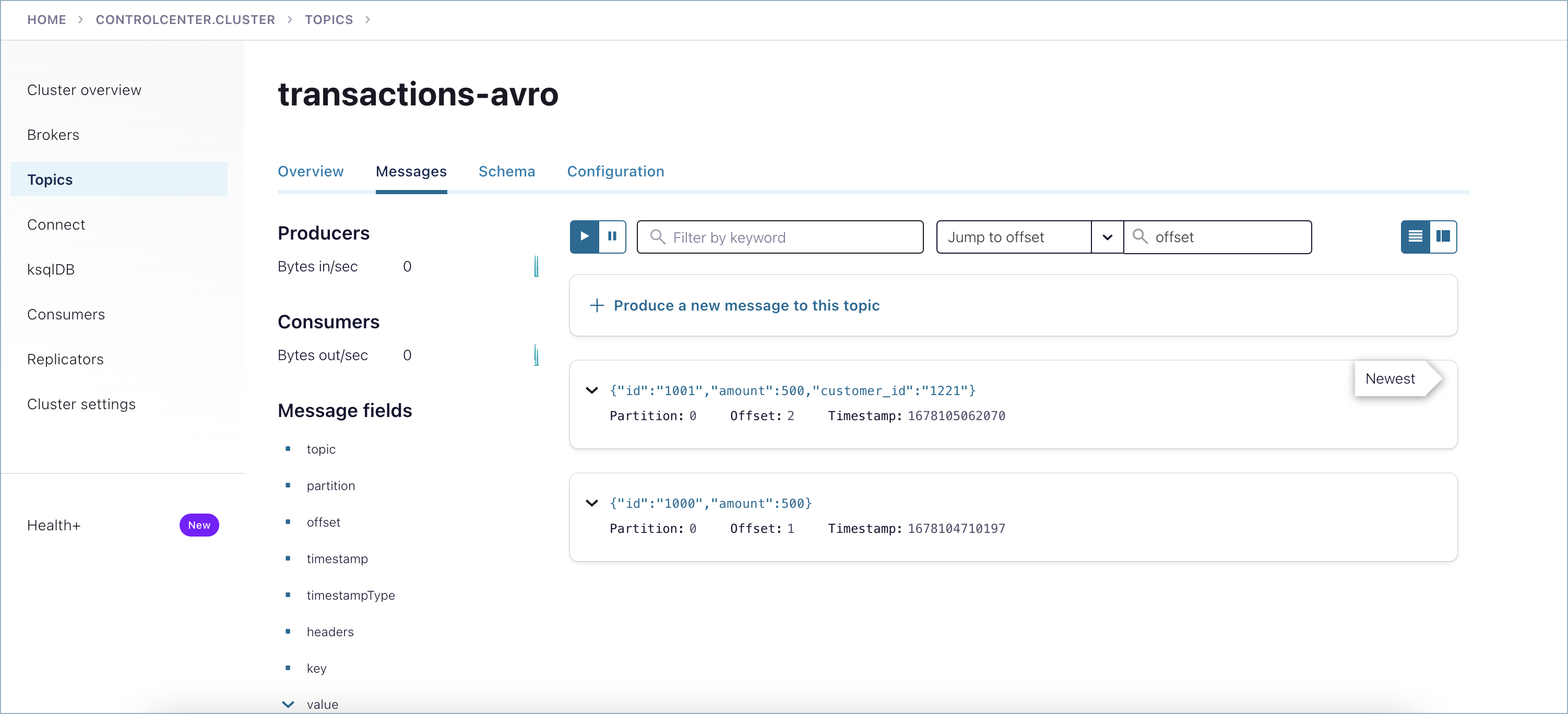

Typically, IndexedRecord is used for the value of the Kafka message. If a key is used, it is often a primitive type such as String or Long. When sending a message to a topic t, the Avro schemas for the key and the value are automatically registered in Schema Registry under the subjects t-key and t-value, respectively, if the compatibility test passes. The only exception is that the null type is never registered in Schema Registry.
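The naming rule above can be expressed as a small function. This sketch assumes the default topic-based subject naming described in the paragraph; `SubjectNames.subjectFor` is a hypothetical helper, and the real serializer's internals may differ. A `null` key or value yields no subject, mirroring the rule that the null type is never registered.

```java
import java.util.Optional;

// Hypothetical helper modeling the default subject naming:
// key schemas go under "<topic>-key", value schemas under "<topic>-value".
final class SubjectNames {
    static Optional<String> subjectFor(String topic, boolean isKey, Object data) {
        if (data == null) {
            return Optional.empty(); // the null type is never registered
        }
        return Optional.of(topic + (isKey ? "-key" : "-value"));
    }

    public static void main(String[] args) {
        System.out.println(subjectFor("t", true, "some-key"));  // prints Optional[t-key]
        System.out.println(subjectFor("t", false, 123L));       // prints Optional[t-value]
        System.out.println(subjectFor("t", true, null));        // prints Optional.empty
    }
}
```

Because the key and value subjects are separate, the key schema and the value schema of the same topic evolve and are compatibility-checked independently.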




To guarantee stability for clients, Confluent Platform and its serializers ensure that, within the version specified by the magic byte, the format will never change in any backwards-incompatible way.

For examples of the settings that control schema registration, see Auto Schema Registration in the Schema Registry tutorials.
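On the reading side, the magic byte is what lets a consumer decide whether it understands the rest of the message. Below is a minimal sketch, assuming the same layout as the framing example (magic byte `0`, 4-byte big-endian schema ID, payload). `WireFormatReader` is a hypothetical class, not the deserializer's actual code, and it rejects any magic byte it does not recognize rather than guessing.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

// Hypothetical reader for [magic byte][4-byte big-endian schema ID][payload].
final class WireFormatReader {
    static final byte MAGIC_BYTE = 0x0;
    static final int HEADER_LENGTH = 1 + Integer.BYTES;

    // Validates the header and returns the embedded schema ID.
    static int schemaId(byte[] message) {
        if (message.length < HEADER_LENGTH) {
            throw new IllegalArgumentException("Message shorter than the 5-byte header");
        }
        ByteBuffer buf = ByteBuffer.wrap(message); // big-endian by default
        byte magic = buf.get();
        if (magic != MAGIC_BYTE) {
            throw new IllegalArgumentException("Unknown magic byte: " + magic);
        }
        return buf.getInt();
    }

    // Returns the Avro-encoded bytes that follow a valid header.
    static byte[] payload(byte[] message) {
        schemaId(message); // throws if the header is invalid
        return Arrays.copyOfRange(message, HEADER_LENGTH, message.length);
    }

    public static void main(String[] args) {
        byte[] message = {0, 0, 0, 0, 42, 2, 97};
        System.out.println(schemaId(message));                 // prints 42
        System.out.println(Arrays.toString(payload(message))); // prints [2, 97]
    }
}
```

Failing fast on an unknown magic byte keeps a consumer from misinterpreting bytes written under a format version it does not support.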


All fields in Protobuf are optional by default. The serializers also accept a setting that specifies how to pick the credentials for the Basic authentication header.

A single stream of messages can also describe a sequence of related events. For example, a financial service that tracks a customer account might record initiating checking and savings accounts, making a deposit, then a withdrawal, applying for a loan, getting approval, and so forth.
