Huggingface tokenizers

Big shoutout to rlrs for the fast replace normalizers PR.

Released: Feb 12. Provides an implementation of today's most used tokenizers, with a focus on performance and versatility. These are Python bindings over the Rust implementation; if you are interested in the high-level design, you can find it there.


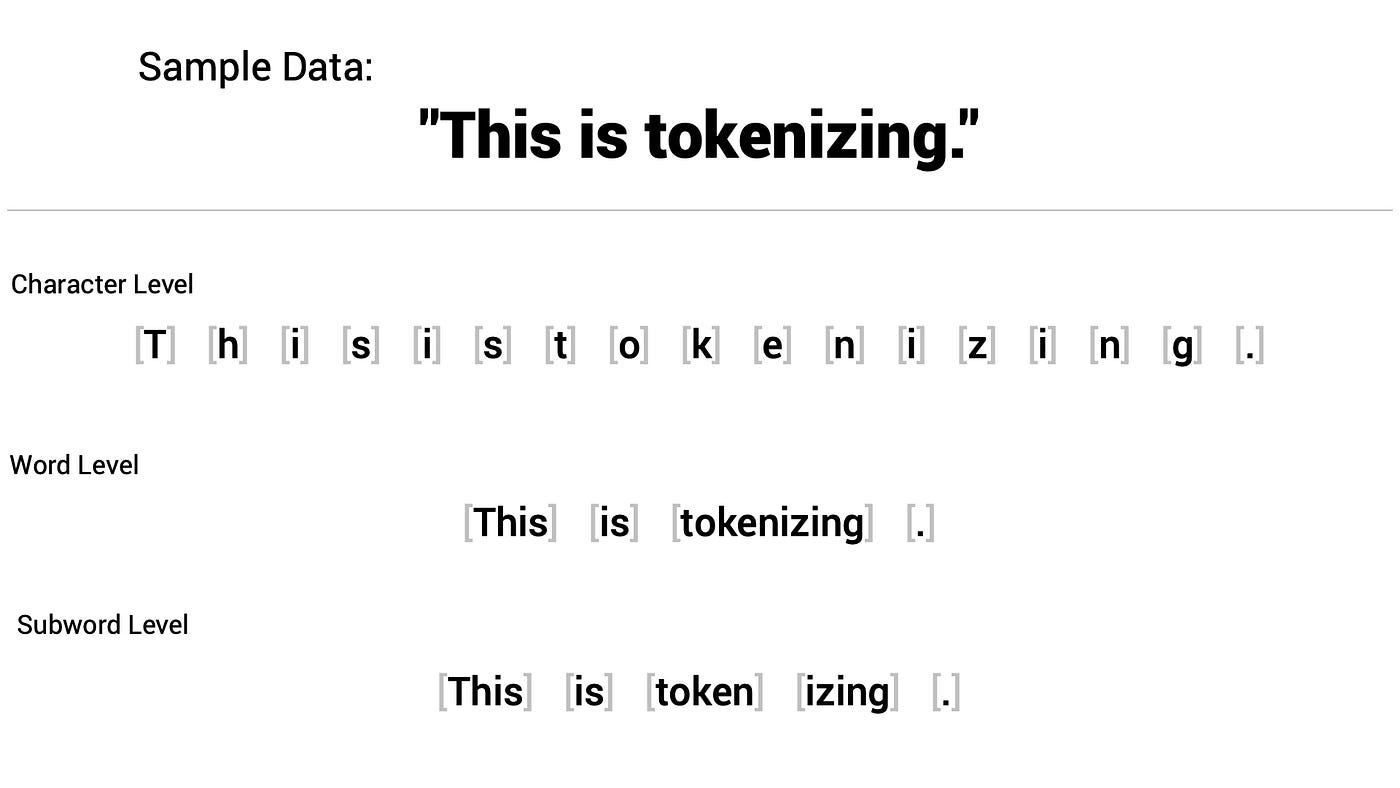

Tokenizers are one of the core components of the NLP pipeline. They serve one purpose: to translate text into data that can be processed by the model. In NLP tasks, the data being processed is generally raw text, but models can only process numbers, so tokenizers need to convert text inputs to numerical data. The goal is to find the most meaningful representation (that is, the one that makes the most sense to the model) and, if possible, the smallest one.

The first type of tokenizer that comes to mind is word-based: the raw text is split into words, and each word gets a numerical representation. There are different ways to split the text, and there are variations of word tokenizers that have extra rules for punctuation. Each word is assigned an ID, starting from 0 and going up to the size of the vocabulary, and the model uses these IDs to identify each word. Finally, we need a special token, usually called the unknown token, to represent words that are not in the vocabulary. The goal when crafting the vocabulary is to build it so that the tokenizer maps as few words as possible to the unknown token.
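
A minimal sketch of a word-based tokenizer in plain Python. It assumes a toy one-sentence corpus and a punctuation rule implemented with a regular expression. Both are illustrative choices, not the library's behavior. IDs start at 0, and unseen words fall back to a hypothetical `[UNK]` token.

```python
import re

# Hypothetical splitting rule: runs of word characters, or single punctuation marks.
WORD_RE = re.compile(r"\w+|[^\w\s]")


def build_vocab(corpus, unk_token="[UNK]"):
    """Assign IDs from 0 upward; the unknown token gets ID 0 in this sketch."""
    vocab = {unk_token: 0}
    for text in corpus:
        for word in WORD_RE.findall(text):
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text, vocab, unk_token="[UNK]"):
    """Map each word to its ID, falling back to the unknown token's ID."""
    return [vocab.get(word, vocab[unk_token]) for word in WORD_RE.findall(text)]


vocab = build_vocab(["Let's do tokenization!"])
print(vocab)
print(encode("Let's do it!", vocab))  # "it" is out of vocabulary -> [1, 2, 3, 4, 0, 6]
```

The vocabulary only grows with the words seen during building. Any new word, here "it", collapses to the single unknown ID, and that information is lost to the model.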

As another example, XLNetTokenizer, which is based on SentencePiece, tokenizes the same example text into its own set of subword tokens.
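
XLNet's tokenizer is built on SentencePiece. SentencePiece treats the input as a raw character stream and replaces spaces with the marker "▁" (U+2581), which makes decoding lossless. The plain-Python sketch below shows only that space-marking step. It leaves out the subword model that would split pieces further, and its function names are hypothetical. In the tokenizers library, the `Metaspace` pre-tokenizer plays a similar role.

```python
SPACE_MARKER = "\u2581"  # "▁", the marker SentencePiece uses for spaces


def pre_tokenize(text):
    """Replace spaces with the marker and split so each piece starts at a marker."""
    marked = SPACE_MARKER + text.replace(" ", SPACE_MARKER)
    pieces, current = [], ""
    for ch in marked:
        if ch == SPACE_MARKER and current:
            pieces.append(current)  # a new marker closes the previous piece
            current = ch
        else:
            current += ch
    if current:
        pieces.append(current)
    return pieces


def detokenize(pieces):
    """Join pieces and turn markers back into spaces, dropping the one we prepended."""
    text = "".join(pieces).replace(SPACE_MARKER, " ")
    return text[1:] if text.startswith(" ") else text


pieces = pre_tokenize("Hello world")
print(pieces)              # ['▁Hello', '▁world']
print(detokenize(pieces))  # Hello world
```

Because every space survives as a marker, even repeated or leading spaces round-trip exactly.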


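A tokenizer is in charge of preparing the inputs for a model, and the library contains tokenizers for all the models. The tokenizer classes inherit from PreTrainedTokenizerBase.

A few of the call arguments deserve a note. For `stride`, used together with `max_length` and `return_overflowing_tokens=True`, the value of this argument defines the number of overlapping tokens between the truncated sequence and the overflowing tokens. If `is_split_into_words` is set to True, the tokenizer assumes the input is already split into words (for instance, by splitting it on whitespace), which it will then tokenize; this is useful for NER or token classification. `pad_to_multiple_of` pads sequences to a multiple of the given value and requires padding to be activated. `return_tensors` selects the type of tensors returned; acceptable values include `'pt'` (PyTorch), `'tf'` (TensorFlow), and `'np'` (NumPy).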
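
To make the stride behavior concrete, here is a plain-Python sketch. It cuts a long sequence of token IDs into windows of at most `max_length` tokens, where consecutive windows share `stride` tokens. This is roughly what overflowing tokens with a stride look like. It is an approximation: it ignores special tokens and padding, and `split_with_stride` is a hypothetical helper, not a library function.

```python
def split_with_stride(ids, max_length, stride):
    """Cut `ids` into windows of at most `max_length`; neighbours overlap by `stride`."""
    if not 0 <= stride < max_length:
        raise ValueError("stride must satisfy 0 <= stride < max_length")
    windows, start = [], 0
    while True:
        windows.append(ids[start:start + max_length])
        if start + max_length >= len(ids):
            break  # the last window reached the end of the sequence
        start += max_length - stride  # step back by `stride` tokens to create the overlap
    return windows


for window in split_with_stride(list(range(10)), max_length=4, stride=2):
    print(window)
# [0, 1, 2, 3]
# [2, 3, 4, 5]
# [4, 5, 6, 7]
# [6, 7, 8, 9]
```

The overlap gives each window some context from its neighbor. This matters for tasks such as question answering, where an answer may straddle a window boundary.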


As we saw in the preprocessing tutorial, tokenizing a text means splitting it into words or subwords, which are then converted to IDs through a look-up table. Converting words or subwords to IDs is straightforward, so in this summary we will focus on splitting a text into words or subwords (that is, on tokenizing a text). Note that on each model page, you can look at the documentation of the associated tokenizer to know which tokenizer type was used by the pretrained model.
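
WordPiece, the tokenizer used by BERT, splits words into subwords with a greedy longest-match-first strategy. Pieces that continue a word are prefixed with "##". The plain-Python sketch below assumes a tiny hand-written vocabulary. It splits each word that way and then converts the pieces to IDs through the look-up table. The vocabulary and function names are hypothetical.

```python
VOCAB = {"[UNK]": 0, "i": 1, "have": 2, "a": 3, "new": 4, "gp": 5, "##u": 6, "!": 7}


def split_word(word, vocab, unk_token="[UNK]"):
    """Greedily take the longest vocabulary entry at each position of `word`."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # mark a continuation piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no piece matches: the whole word is unknown
        pieces.append(piece)
        start = end
    return pieces


def tokenize(words, vocab):
    """Split every (already lowercased, pre-split) word, then look up the IDs."""
    tokens = [piece for word in words for piece in split_word(word, vocab)]
    return tokens, [vocab[token] for token in tokens]


tokens, ids = tokenize(["i", "have", "a", "new", "gpu", "!"], VOCAB)
print(tokens)  # ['i', 'have', 'a', 'new', 'gp', '##u', '!']
print(ids)     # [1, 2, 3, 4, 5, 6, 7]
```

The word "gpu" is not in the vocabulary, yet it does not become unknown. It is rebuilt from the known pieces "gp" and "##u".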


A fast tokenizer can return a list mapping the tokens to their actual word in the initial sentence. When adding new tokens to the vocabulary, you should make sure to also resize the token embedding matrix of the model so that its embedding matrix matches the tokenizer. For fast tokenizers provided by the HuggingFace tokenizers library, `set_truncation_and_padding` defines the truncation and padding strategies and restores the tokenizer settings afterwards. When tokenization is turned off (for example with `tokenize=False` in `apply_chat_template`), the output will be a string.

Special tokens can be given as strings or `AddedToken` objects; for instance, the optional `sep_token` is a special token separating two different sentences in the same input, as used by BERT.

In byte-pair encoding (BPE), training starts from a base vocabulary of individual symbols and repeatedly merges the most frequent pair of adjacent symbols. If "u" followed by "g" is the most frequent pair in the training corpus, the first merge rule the tokenizer learns is to group all "u" symbols followed by a "g" symbol together.
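
The merge-learning loop behind BPE can be sketched in plain Python. The word frequencies below are an assumed toy corpus in which "u" followed by "g" is the most frequent pair, so that pair becomes the first merge rule. The helper names are hypothetical. Real implementations add details that this sketch leaves out, such as byte-level alphabets, special tokens, and tie-breaking rules.

```python
from collections import Counter


def count_pairs(words):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(words, pair):
    """Replace every occurrence of `pair` in every word with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge rules, most frequent pair first."""
    words = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(words)
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        words = merge_pair(words, best)
    return merges


corpus = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
print(learn_bpe(corpus, 3))  # [('u', 'g'), ('u', 'n'), ('h', 'ug')]
```

In this corpus, "u g" occurs 20 times (10 + 5 + 5), more than any other pair, so it is merged first. Later merges can then build on merged symbols, as in ("h", "ug").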

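When calling `Tokenizer.encode` or `Tokenizer.encode_batch`, the input text goes through a pipeline: normalization, pre-tokenization, the model, and post-processing. For the examples that require a Tokenizer, we will use the tokenizer we trained in the quicktour, which you can load from its saved file with `Tokenizer.from_file`. Normalization, the first step, cleans up the raw text; common operations include stripping whitespace, removing accented characters, or lowercasing all text.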
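
The normalization operations listed above can be approximated in plain Python with the standard `unicodedata` module. The steps are: decompose characters with NFD, drop the combining accent marks, lowercase, and strip surrounding whitespace. This is a sketch, not the library's code. In the tokenizers library, similar steps are composed from normalizers such as `NFD`, `StripAccents`, and `Lowercase` inside `normalizers.Sequence`.

```python
import unicodedata


def normalize(text: str) -> str:
    """Strip whitespace, remove accents, and lowercase `text`."""
    text = text.strip()
    # NFD splits "é" into "e" plus a combining acute accent.
    decomposed = unicodedata.normalize("NFD", text)
    # Drop the combining marks, keeping only the base characters.
    without_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return without_accents.lower()


print(normalize("  Héllò hôw are ü?  "))  # hello how are u?
```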

This output is ready to pass to the model, either directly or via methods like `generate`, which is useful when you want to generate a response from the model.

For fast tokenizers, special tokens added by the tokenizer are mapped to None in the token-to-word mapping, and other tokens are mapped to the index of their corresponding word (several tokens will be mapped to the same word index if they are parts of that word).

So which kind of tokenizer to choose? Word-based vocabularies grow very large, whereas in general, transformers models rarely have a vocabulary size greater than 50,000, especially if they are pretrained only on a single language. Subword tokenization bridges the gap by splitting rare words into more frequent subwords: for example, XLNetTokenizer splits the rare word "Transformers" into the more frequent subwords "Transform" and "ers".
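
The token-to-word mapping described above can be illustrated with a plain-Python sketch. A fast tokenizer in transformers exposes this mapping through `word_ids()` on its encoding. The sketch assumes the input is already split into words. A hypothetical `split_word` function stands in for the subword model, and `[CLS]` and `[SEP]` stand in for special tokens added by the tokenizer.

```python
from typing import Callable, List, Optional, Tuple


def encode_with_word_ids(
    words: List[str],
    split_word: Callable[[str], List[str]],
    cls_token: str = "[CLS]",
    sep_token: str = "[SEP]",
) -> Tuple[List[str], List[Optional[int]]]:
    """Return the tokens and, for each token, the index of its word (None for specials)."""
    tokens: List[str] = [cls_token]
    word_ids: List[Optional[int]] = [None]
    for index, word in enumerate(words):
        for piece in split_word(word):
            tokens.append(piece)
            word_ids.append(index)  # every piece of a word shares the word's index
    tokens.append(sep_token)
    word_ids.append(None)
    return tokens, word_ids


# Hypothetical subword splits, for illustration only.
TOY_SPLITS = {"Transformers": ["Transform", "ers"]}

tokens, word_ids = encode_with_word_ids(
    ["I", "love", "Transformers"], lambda w: TOY_SPLITS.get(w, [w])
)
print(tokens)    # ['[CLS]', 'I', 'love', 'Transform', 'ers', '[SEP]']
print(word_ids)  # [None, 0, 1, 2, 2, None]
```

In token classification, a mapping like this is what lets word-level labels be aligned with subword tokens. For example, the label is placed on the first piece of each word, and the special tokens are skipped.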
