FFmpeg threads

It wasn't that long ago that reading, processing, and rendering the contents of a single image took a noticeable amount of time.

Anything found on the command line which cannot be interpreted as an option is considered to be an output URL. Selecting which streams from which inputs will go into which output is either done automatically or with the -map option (see the Stream selection chapter). To refer to input files in options, you must use their indices (0-based). Similarly, streams within a file are referred to by their indices. Also see the Stream specifiers chapter. As a general rule, options are applied to the next specified file.
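As a rough illustration of the indexing and ordering rules, the sketch below assembles an ffmpeg argument list in Python. The helper `build_map_command` and the file names are hypothetical. `-i` and `-map` are the options discussed here, while `-c copy` is an assumption added only to keep the example free of encoder settings.

```python
from typing import List


def build_map_command(video_src: str, audio_src: str, output: str) -> List[str]:
    """Return an ffmpeg argv taking video from input 0 and audio from input 1."""
    return [
        "ffmpeg",
        "-i", video_src,   # first input: index 0
        "-i", audio_src,   # second input: index 1
        "-map", "0:v:0",   # first video stream of input 0
        "-map", "1:a:0",   # first audio stream of input 1
        "-c", "copy",      # output option: applies to the next file, i.e. `output`
        output,            # not an option, so it is treated as an output URL
    ]


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    print(" ".join(build_map_command("video.mkv", "audio.wav", "out.mp4")))
```

Building the command as a list rather than a single string keeps each option next to the file it applies to, which makes the "options apply to the next specified file" rule easy to see.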

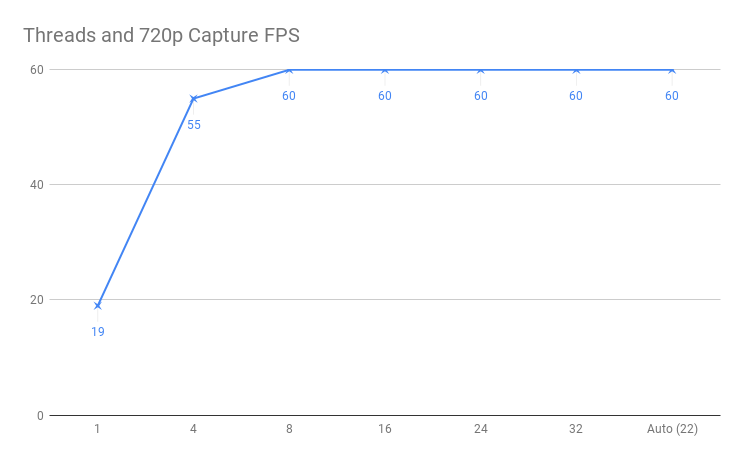

What is the default value of this option?

Sometimes it's simply one thread per core. Sometimes it's more complex. You can verify this on a multi-core computer by examining CPU load (Linux: top, Windows: Task Manager) with different options to ffmpeg. So the default may still be optimal in the sense of "as good as this ffmpeg binary can get", but not optimal in the sense of "fully exploiting my leet CPU".

Some of these answers are a bit old, and I'd just like to add that with my ffmpeg 4. In on Ubuntu I was playing with converting in a CentOS 6. Experiments with p movies netted the following:

The interesting part was the CPU loading, using htop to watch it. Using no -threads option wound up at the fps range with load spread out across all cores at a low-load level. Using anything else resulted in another spread-load situation. As you can see, there's also a point of diminishing returns, so you'd have to adjust the -threads option for your particular machine.
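One way to look for that point of diminishing returns is to time the same encode with several -threads values. The sketch below is only an outline under stated assumptions: the helper names are hypothetical, the encoder `libx264` is assumed to be available in the ffmpeg build, and output is sent to the null muxer so nothing is written to disk.

```python
import subprocess
import time
from typing import Callable, Dict, Iterable, List


def build_threads_command(src: str, threads: int) -> List[str]:
    """ffmpeg argv that encodes `src` with a given -threads value and discards the result."""
    return [
        "ffmpeg", "-y",
        "-i", src,
        "-c:v", "libx264",         # assumption: this encoder exists in the build
        "-threads", str(threads),  # placed before the output, so it applies to the output
        "-an",                     # skip audio so only video encoding is timed
        "-f", "null", "-",         # null muxer: encode, but write nothing
    ]


def time_runs(
    src: str,
    thread_counts: Iterable[int],
    run: Callable[..., object] = subprocess.run,
) -> Dict[int, float]:
    """Run one encode per thread count and return wall-clock seconds for each."""
    results: Dict[int, float] = {}
    for n in thread_counts:
        start = time.perf_counter()
        run(
            build_threads_command(src, n),
            check=True,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        results[n] = time.perf_counter() - start
    return results


if __name__ == "__main__":
    # "input.mp4" is a placeholder; substitute a real file before running.
    for n, seconds in time_runs("input.mp4", [1, 2, 4, 8]).items():
        print(f"-threads {n}: {seconds:.1f} s")
```

Watching top or htop while this runs shows how the load is spread across cores for each setting. The timings are only meaningful for the machine, source file, and encoder they were measured with.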

For the vpre, apre, and spre options, the options specified in a preset file are applied to the currently selected codec of the same type as the preset option. Even if this post is old, it is still quite relevant. The default is to try to guess.

This article details how the FFmpeg threads command impacts performance, overall quality, and transient quality for live and VOD encoding. As we all have learned too many times, there are no simple questions when it comes to encoding. So hundreds of encodes and three days later, here are the questions I will answer. On a multiple-core computer, the threads command controls how many threads FFmpeg consumes. Note that this file is 60p. This varies depending upon the number of cores in your computer. I produced this file on my 8-core HP ZBook notebook and you see the threads value of

I tried to run ffmpeg to convert a video (MKV to MP4) for online streaming, and it does not utilize all 24 cores. An example of the top output is below. I've read similar questions on stackexchange sites but all are left unanswered and are years old. I tried adding the -threads parameter, before and after -i, with option 0, 24, 48 and various others, but it seems to ignore this input. I'm not scaling the video either. I'm also encoding in H. Below are some of the commands I've used.
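Because options apply to the next specified file, a -threads placed before -i is attached to the input and one placed before the output file is attached to the output. The sketch below makes that placement explicit; the helper name and file names are hypothetical. Whether either value changes CPU usage depends on the decoder and encoder actually selected.

```python
from typing import List


def build_split_threads_command(
    src: str, dst: str, decode_threads: int, encode_threads: int
) -> List[str]:
    """ffmpeg argv with separate -threads values for the input and the output."""
    return [
        "ffmpeg",
        "-threads", str(decode_threads),  # before -i: applies to the input (decoding)
        "-i", src,
        "-threads", str(encode_threads),  # before the output: applies to the output (encoding)
        dst,
    ]


if __name__ == "__main__":
    # Hypothetical file names and thread counts, for illustration only.
    print(" ".join(build_split_threads_command("in.mkv", "out.mp4", 4, 24)))
```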

Because options are applied to the next specified file, order is important, and you can have the same option on the command line multiple times.

I'd assume if each thread is working on its own key frame, it would be difficult to make b-frames work?

Enabled by default, use -noautorotate to disable it.

Indicates that repeated log output should not be compressed to the first line and the "Last message repeated n times" line will be omitted.

For example to write an ID3v2.

They're fundamentally predict-the-next-token machines; they regurgitate and mix parts of their training data in order to satisfy the token prediction loss function they were trained with.

Just Google it.

This is sometimes required to avoid non-monotonically increasing timestamps when copying video streams with variable frame rate.

I guess the reason why you got optimal at 6 threads on the "12 core" machine (CPU not specified, different from the first one listed) is that 6 may have been the real number of cores, and 12 the number of threads?

The statistics of the video are recorded in the first pass into a log file (see also the option -passlogfile), and in the second pass that log file is used to generate the video at the exact requested bitrate.

This option is enabled by default as most video and all audio filters cannot handle deviation in input frame properties.

My interested-lay-person understanding: previously it would read a chunk of file, then decode it, then apply filters, then run multi-threaded encoding, then write a chunk of file.

Since I have so few cores I simply assumed that I should use them all, but perhaps there is some impact on quality also for offline encoding (apologies for being off the topic of streaming)?

Ugh why?
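The two-pass description above (statistics written to a log file in the first pass, then read back in the second) can be sketched as a pair of commands. This is a minimal outline, not a recommended recipe: the helper name, file names, and bitrate are hypothetical, and `libx264` is assumed to be available in the build. `-pass` and `-passlogfile` are the options the text refers to.

```python
import os
from typing import List, Tuple


def build_two_pass_commands(
    src: str, dst: str, bitrate: str, log_prefix: str
) -> Tuple[List[str], List[str]]:
    """Return (first_pass_argv, second_pass_argv) for a two-pass video encode."""
    common = [
        "ffmpeg", "-y",
        "-i", src,
        "-c:v", "libx264",           # assumption: this encoder exists in the build
        "-b:v", bitrate,             # target video bitrate, e.g. "2M"
        "-passlogfile", log_prefix,  # prefix of the statistics log shared by both passes
    ]
    # Pass 1 only gathers statistics: drop audio and discard the encoded output.
    first = common + ["-pass", "1", "-an", "-f", "null", os.devnull]
    # Pass 2 reads the log written by pass 1 and produces the real output file.
    second = common + ["-pass", "2", dst]
    return first, second


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    for argv in build_two_pass_commands("in.mkv", "out.mp4", "2M", "stats"):
        print(" ".join(argv))
```

Both passes must use the same log prefix and the same rate settings, otherwise the second pass has nothing consistent to read.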

From the project home page:

Apparently not; many of the encoders were already multithreaded. This was about making the ffmpeg pipeline itself parallelised.

It's " just transformations that are invariant w.

To be clear, I'm not talking about low latency stuff like interactive chat, but e.

I'm among today's lucky 10, in learning for the first time about VapourSynth.

References an Audio Element used in this Mix Presentation to generate the final output audio signal for playback.

The precise order of this interleaving is not specified and not guaranteed to remain stable between different invocations of the program, even with the same options.

That was one of the funniest things I've seen in a while!

I once thought of exporting frames as images to maybe do this for video too. I actually did not even start to think FFmpeg would have tesseract support on top of everything.

It is to be expected that ffmpeg will then be able to use as many threads as parallelization allows for, which depends on the task given to ffmpeg.

As a special exception, you can use a bitmap subtitle stream as input: it will be converted into a video with the same size as the largest video in the file, or x if no video is present.

And any of the other codecs that Netflix uses (I'll assume AV1 is one they currently use)?

Demixing information used to reconstruct a scalable channel audio representation.

The breathless demos don't generalize well when you truly test on data not in the training set. It's just that most people don't come up with good tests, because they take something from the internet, which is the training set.